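The official GStreamer source tree includes an open-source example project that implements audio/video calls on top of the webrtc plugin. This article describes how to set up the environment and run it on Ubuntu.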

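I. Environment setup

1. Install the dependencies

Installing GStreamer itself is skipped here; only the libraries needed by the webrtcbin plugin are installed, following the official site. A system version newer than Ubuntu 18.04 is recommended.

First install the following dependencies.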

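    sudo apt-get install -y gstreamer1.0-tools gstreamer1.0-nice gstreamer1.0-plugins-bad gstreamer1.0-plugins-ugly gstreamer1.0-plugins-good libgstreamer1.0-dev git libglib2.0-dev libgstreamer-plugins-bad1.0-dev libsoup2.4-dev libjson-glib-dev

The GStreamer project officially recommends building with meson and ninja; see the official documentation.

    sudo apt-get install meson ninja-build

If you want to build and run in a virtual environment, the official recommendation is hotdoc; see the official web page for installation and usage.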

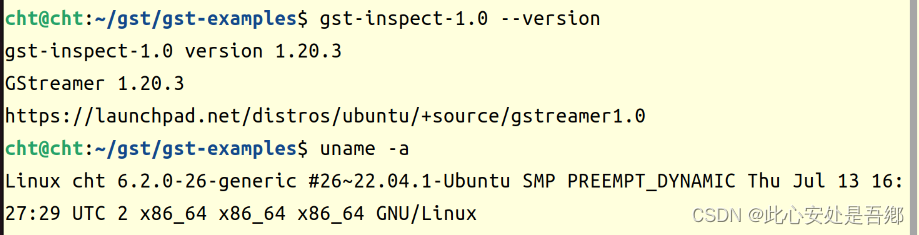

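The system used here is Ubuntu 22.04, with GStreamer 1.20.

2. Build and run

The gst-webrtc example project lives in the gst-examples directory of the official GStreamer sources. Download the archive for the matching version, or clone it directly with git.

    cd /path/gst-examples
    meson build

If the environment is set up correctly, the output looks like this: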

    The Meson build system
    Version: 1.2.1
    Source dir: /home/cht/gst/gst-examples
    Build dir: /home/cht/gst/gst-examples/reconfigure
    Build type: native build
    Project name: gst-examples
    Project version: 1.19.2
    C compiler for the host machine: cc (gcc 11.4.0 "cc (Ubuntu 11.4.0-1ubuntu1~22.04) 11.4.0")
    C linker for the host machine: cc ld.bfd 2.38
    Host machine cpu family: x86_64
    Host machine cpu: x86_64
    Library m found: YES
    Found pkg-config: /usr/bin/pkg-config (0.29.2)
    Run-time dependency glib-2.0 found: YES 2.72.4
    Run-time dependency gio-2.0 found: YES 2.72.4
    Run-time dependency gobject-2.0 found: YES 2.72.4
    Run-time dependency gmodule-2.0 found: YES 2.72.4
    Run-time dependency gstreamer-1.0 found: YES 1.20.3
    Run-time dependency gstreamer-play-1.0 found: YES 1.20.3
    Run-time dependency gstreamer-tag-1.0 found: YES 1.20.1
    Run-time dependency gstreamer-webrtc-1.0 found: YES 1.20.3
    Run-time dependency gstreamer-sdp-1.0 found: YES 1.20.1
    Run-time dependency gstreamer-rtp-1.0 found: YES 1.20.1
    Dependency gstreamer-play-1.0 found: YES 1.20.3 (cached)
    Found CMake: /usr/bin/cmake (3.22.1)
    Run-time dependency gtk+-3.0 found: NO (tried pkgconfig and cmake)
    Run-time dependency x11 found: YES 1.7.5
    Dependency gstreamer-sdp-1.0 found: YES 1.20.1 (cached)
    Run-time dependency libsoup-2.4 found: YES 2.74.2
    Run-time dependency json-glib-1.0 found: YES 1.6.6
    Program openssl found: YES (/usr/bin/openssl)
    Program generate_cert.sh found: YES (/home/cht/gst/gst-examples/webrtc/signalling/generate_cert.sh)
    Program configure_test_check.py found: YES (/usr/bin/python3 /home/cht/gst/gst-examples/webrtc/check/configure_test_check.py)
    WARNING: You should add the boolean check kwarg to the run_command call. It currently defaults to false, but it will default to true in future releases of meson.
    See also: https://github.com/mesonbuild/meson/issues/9300
    Build targets in project: 7
    Found ninja-1.11.1.git.kitware.jobserver-1 at /home/cht/.local/bin/ninja
    WARNING: Running the setup command as `meson [options]` instead of `meson setup [options]` is ambiguous and deprecated.

Meson checks whether the dependencies required for the build are in place. The output also shows that the webrtc example project has only been updated to version 1.19. With an older GStreamer such as 1.18, the program built with meson and ninja may report at run time that libnice-related plugins are missing. In that case, download libnice from https://gitlab.freedesktop.org/libnice/libnice and build it manually, again with meson and ninja. Once it is built, install the libgstnice.so plugin into the system's default GStreamer plugin path, /lib/x86_64-linux-gnu/gstreamer-1.0.
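One way to confirm the install step is to check that the plugin file is readable in the plugin directory. The following is a minimal sketch in plain C (POSIX); the helper name plugin_installed is hypothetical, and the directory and file name in the usage note are simply the ones quoted above.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>
#include <unistd.h>

/* Hypothetical helper: returns 1 if <plugin_dir>/<file_name> is readable,
 * 0 otherwise (including when the joined path does not fit the buffer). */
static int
plugin_installed (const char *plugin_dir, const char *file_name)
{
  char path[4096];
  int n = snprintf (path, sizeof path, "%s/%s", plugin_dir, file_name);

  if (n < 0 || (size_t) n >= sizeof path)
    return 0;

  /* access() with R_OK tests read permission for the calling user. */
  return access (path, R_OK) == 0;
}
```

Calling plugin_installed ("/lib/x86_64-linux-gnu/gstreamer-1.0", "libgstnice.so") returns 1 only if the file is present and readable at that location.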

Once meson succeeds, build and run directly:

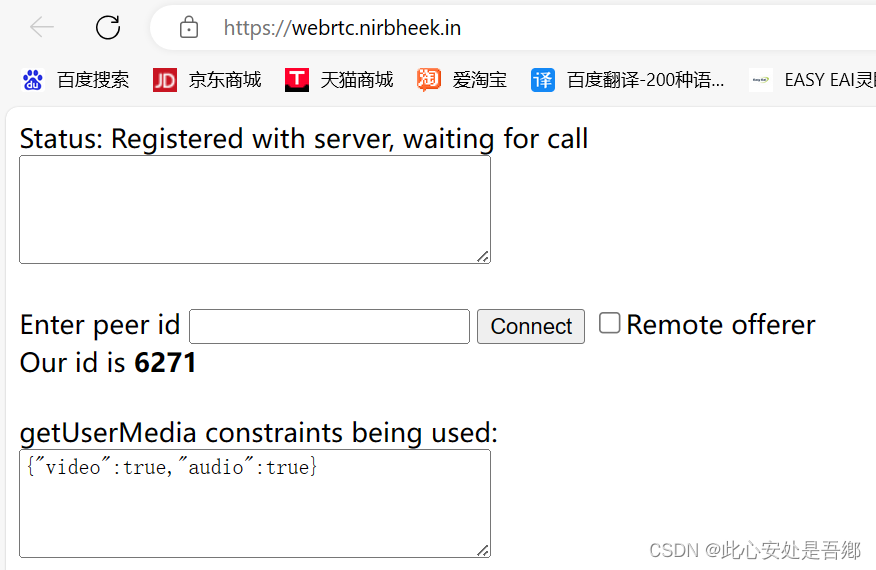

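    ninja -C build
    cd gst-examples/build/webrtc/sendrecv/gst
    ./webrtc-sendrecv --peer-id=xxxx

The peer-id is a random number generated by the web page at https://webrtc.nirbheek.in. The page is shown below.

When webrtc-sendrecv is run with peer-id, the ID has to be provided by the remote side; with our-id, the remote side can initiate the connection instead.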

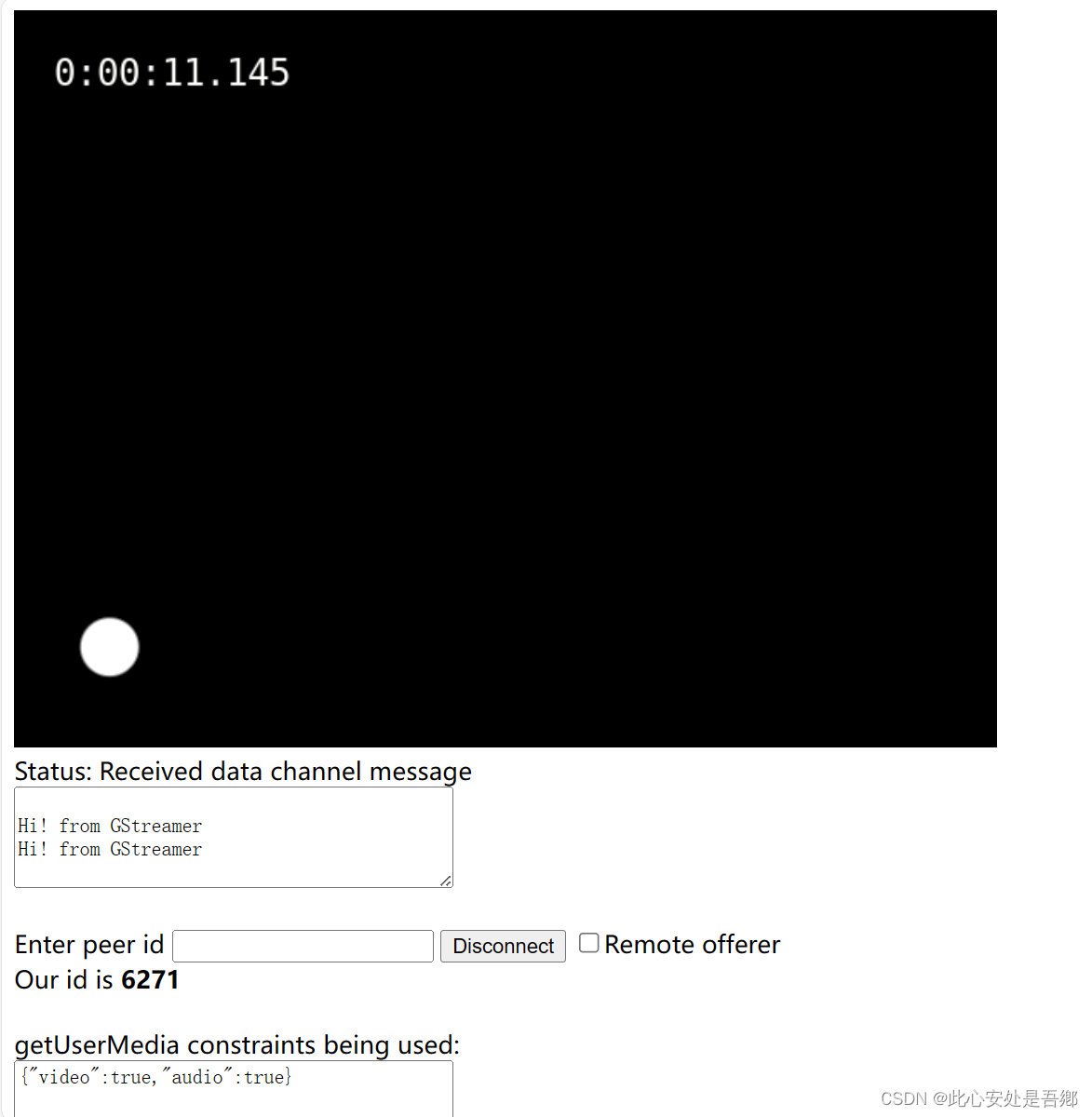
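The relationship between the two options can be sketched as a small decision function. This is only an illustration in plain C, not the example's actual option handling: the names CallMode and select_call_mode are hypothetical, and treating the two options as mutually exclusive is an assumption.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical modes for illustration only. */
typedef enum
{
  MODE_INVALID,                 /* neither or both options given */
  MODE_CALL_PEER,               /* --peer-id: we connect to the remote ID */
  MODE_WAIT_FOR_PEER            /* --our-id: we register and wait for a call */
} CallMode;

/* Decide the call direction from the two optional command-line values.
 * A NULL pointer means the option was not passed. */
static CallMode
select_call_mode (const char *peer_id, const char *our_id)
{
  if (peer_id != NULL && our_id != NULL)
    return MODE_INVALID;        /* assumed to be mutually exclusive */
  if (peer_id != NULL)
    return MODE_CALL_PEER;
  if (our_id != NULL)
    return MODE_WAIT_FOR_PEER;
  return MODE_INVALID;
}
```

With --peer-id=xxxx the local side is the caller; with only our-id set, the local side waits and the browser page dials in.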

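After a successful run, the web page shows the picture below. The video pushed from the other end is generated by GStreamer's videotestsrc; to make the demonstration easier to follow, a stopwatch-style timer, timeoverlay, is overlaid on it. If the sound card type is unknown, autoaudiosrc can be used to capture audio.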

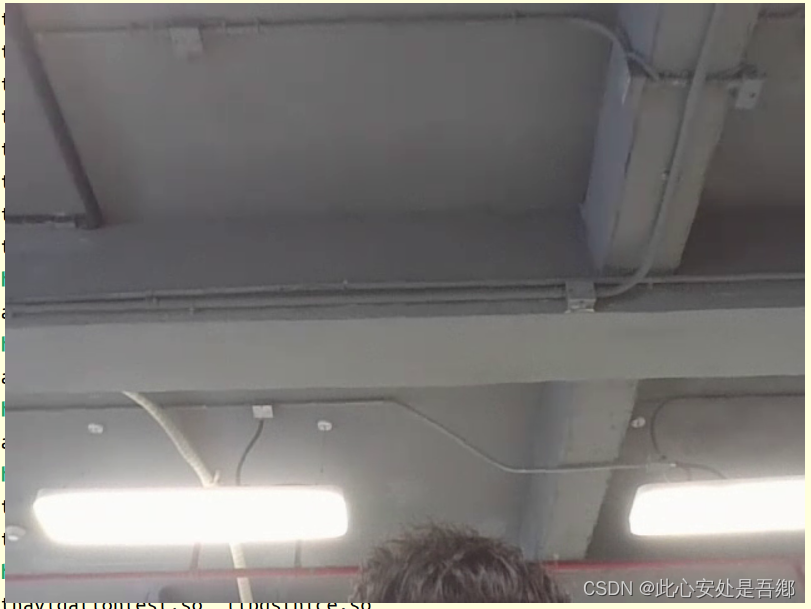

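By default, the web page streams video captured by the computer's built-in camera and audio captured by its microphone. The picture displayed on Ubuntu is as follows.

This gives a simple peer-to-peer audio/video intercom in GStreamer using the webrtcbin plugin.

II. Source analysis of GStreamer capture and playback

1. Capture

The WebRTC flow itself is not analyzed here.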

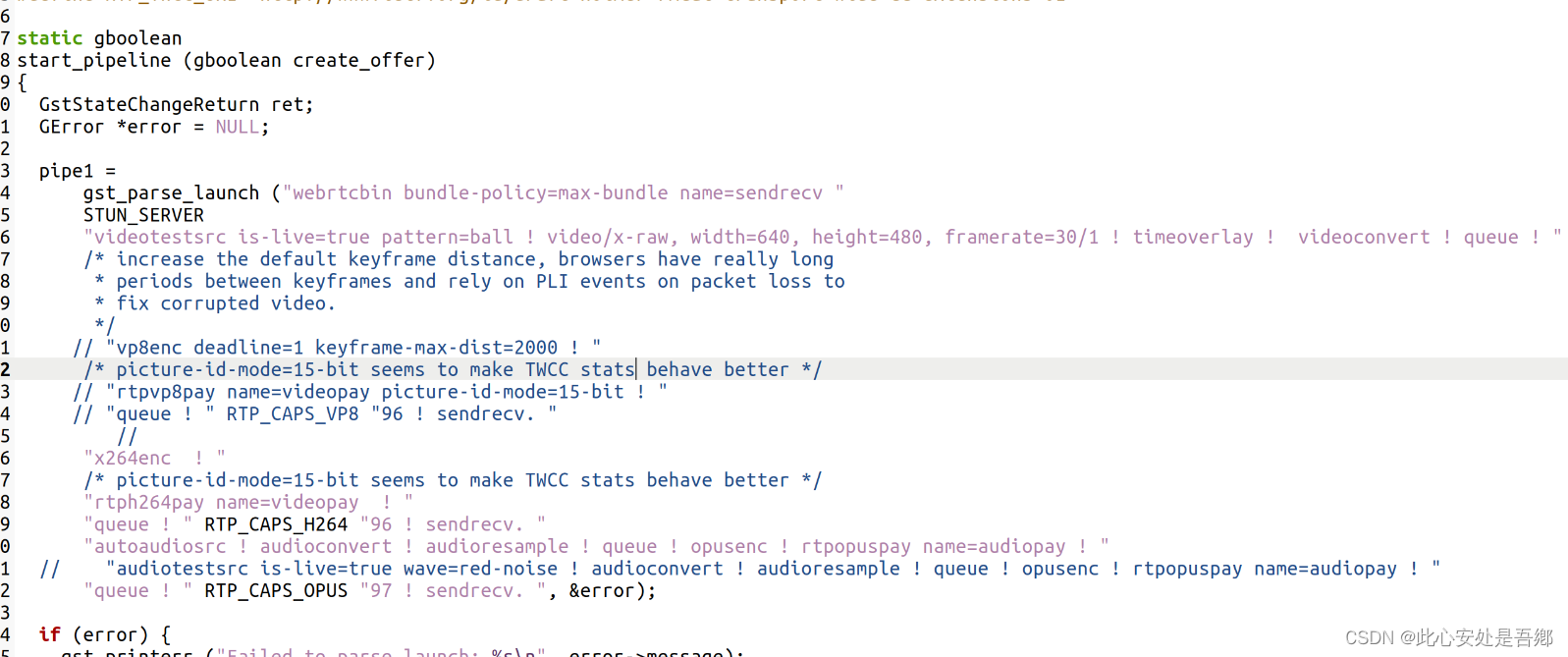

The capture part is in start_pipeline.
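As a rough sketch of how the video branch of such a pipeline description can be assembled as a string: the RTP caps are kept in a macro that ends at "payload=", and the payload type is appended by C string-literal concatenation. The helper build_video_branch, the payload type 96, the element chain, and the webrtcbin element name sendrecv are assumptions made for illustration, not the article's exact code.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* RTP caps for H.264; the string deliberately stops at "payload=". */
#define RTP_CAPS_H264 "application/x-rtp,media=video,encoding-name=H264,payload="

/* Assumed dynamic RTP payload type, appended to the caps macro below. */
#define VIDEO_PAYLOAD "96"

/* Hypothetical helper: writes a video branch description into buf using the
 * given encoder element name (for example a platform hardware encoder).
 * Returns 0 on success, -1 if buf is too small. */
static int
build_video_branch (char *buf, size_t len, const char *encoder)
{
  int n = snprintf (buf, len,
      "videotestsrc is-live=true ! timeoverlay ! videoconvert ! queue ! "
      "%s ! rtph264pay ! queue ! " RTP_CAPS_H264 VIDEO_PAYLOAD " ! sendrecv. ",
      encoder);

  return (n < 0 || (size_t) n >= len) ? -1 : 0;
}
```

Passing "mpph264enc" as the encoder yields a branch that ends in application/x-rtp,media=video,encoding-name=H264,payload=96 ! sendrecv. and could be joined with the rest of the description before it is parsed.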

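This differs from the original project, which uses VP8 software encoding and noise generated by audiotestsrc. Because many platforms support H.264 hardware encoding, the encoder was changed, for example to mpph264enc on Rockchip platforms. A macro definition needs to be added:

    #define RTP_CAPS_H264 "application/x-rtp,media=video,encoding-name=H264,payload="

2. Playback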

    static void
    handle_media_stream (GstPad * pad, GstElement * pipe, const char *convert_name,
        const char *sink_name)
    {
      GstPad *qpad;
      GstElement *q, *conv, *resample, *sink;
      GstPadLinkReturn ret;

      gst_println ("Trying to handle stream with %s ! %s", convert_name, sink_name);

      q = gst_element_factory_make ("queue", NULL);
      g_assert_nonnull (q);
      conv = gst_element_factory_make (convert_name, NULL);
      g_assert_nonnull (conv);
      sink = gst_element_factory_make (sink_name, NULL);
      g_assert_nonnull (sink);

      if (g_strcmp0 (convert_name, "audioconvert") == 0) {
        /* Might also need to resample, so add it just in case.
         * Will be a no-op if it's not required. */
        resample = gst_element_factory_make ("audioresample", NULL);
        g_assert_nonnull (resample);
        gst_bin_add_many (GST_BIN (pipe), q, conv, resample, sink, NULL);
        gst_element_sync_state_with_parent (q);
        gst_element_sync_state_with_parent (conv);
        gst_element_sync_state_with_parent (resample);
        gst_element_sync_state_with_parent (sink);
        gst_element_link_many (q, conv, resample, sink, NULL);
      } else {
        gst_bin_add_many (GST_BIN (pipe), q, conv, sink, NULL);
        gst_element_sync_state_with_parent (q);
        gst_element_sync_state_with_parent (conv);
        gst_element_sync_state_with_parent (sink);
        gst_element_link_many (q, conv, sink, NULL);
      }

      /* Link the decoded pad to the head of the new chain. */
      qpad = gst_element_get_static_pad (q, "sink");

      ret = gst_pad_link (pad, qpad);
      g_assert_cmphex (ret, ==, GST_PAD_LINK_OK);
      /* gst_element_get_static_pad() returns a new reference; release it. */
      gst_object_unref (qpad);
    }

    static void
    on_incoming_decodebin_stream (GstElement * decodebin, GstPad * pad,
        GstElement * pipe)
    {
      GstCaps *caps;
      const gchar *name;

      if (!gst_pad_has_current_caps (pad)) {
        gst_printerr ("Pad '%s' has no caps, can't do anything, ignoring\n",
            GST_PAD_NAME (pad));
        return;
      }

      caps = gst_pad_get_current_caps (pad);
      name = gst_structure_get_name (gst_caps_get_structure (caps, 0));

      /* Choose the converter and sink from the media type of the decoded pad. */
      if (g_str_has_prefix (name, "video")) {
        handle_media_stream (pad, pipe, "videoconvert", "waylandsink");
        // handle_media_stream (pad, pipe, "videoconvert", "autovideosink");
      } else if (g_str_has_prefix (name, "audio")) {
        handle_media_stream (pad, pipe, "audioconvert", "autoaudiosink");
      } else {
        gst_printerr ("Unknown pad %s, ignoring", GST_PAD_NAME (pad));
      }

      /* name points into caps, so release the caps only after the last use. */
      gst_caps_unref (caps);
    }

    static void
    on_incoming_stream (GstElement * webrtc, GstPad * pad, GstElement * pipe)
    {
      GstElement *decodebin;
      GstPad *sinkpad;

      if (GST_PAD_DIRECTION (pad) != GST_PAD_SRC)
        return;

      /* Each incoming webrtcbin source pad gets its own decodebin. */
      decodebin = gst_element_factory_make ("decodebin", NULL);
      g_signal_connect (decodebin, "pad-added",
          G_CALLBACK (on_incoming_decodebin_stream), pipe);
      gst_bin_add (GST_BIN (pipe), decodebin);
      gst_element_sync_state_with_parent (decodebin);

      sinkpad = gst_element_get_static_pad (decodebin, "sink");
      gst_pad_link (pad, sinkpad);
      gst_object_unref (sinkpad);
    }

As the code shows, decoding is handled by decodebin. When the audio pipeline is built, the branch if (g_strcmp0 (convert_name, "audioconvert") == 0) additionally inserts audioresample, so that audio arriving from the remote side is handled generically whatever its format.
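The dispatch logic in on_incoming_decodebin_stream and handle_media_stream can be restated without GStreamer as a lookup from the caps name to the elements chained after decodebin. This is a minimal sketch for illustration; MediaChain and pick_media_chain are hypothetical names, and the sinks are the ones used in the listing above.

```c
#include <assert.h>
#include <string.h>

/* Hypothetical description of the chain built after decodebin. */
typedef struct
{
  const char *convert;          /* converter element name */
  const char *sink;             /* sink element name */
  int needs_resample;           /* 1 if audioresample is inserted */
} MediaChain;

/* Fill 'out' from the caps name (e.g. "video/x-raw", "audio/x-raw").
 * Returns 1 if the media type is handled, 0 for an unknown type. */
static int
pick_media_chain (const char *caps_name, MediaChain *out)
{
  if (strncmp (caps_name, "video", 5) == 0) {
    out->convert = "videoconvert";
    out->sink = "waylandsink";
    out->needs_resample = 0;
    return 1;
  }
  if (strncmp (caps_name, "audio", 5) == 0) {
    out->convert = "audioconvert";
    out->sink = "autoaudiosink";
    out->needs_resample = 1;    /* queue ! audioconvert ! audioresample ! sink */
    return 1;
  }
  return 0;                     /* unknown pad type: ignored */
}
```

The only structural difference between the two branches is the extra resampler on the audio side, which is why handle_media_stream keys on the converter name.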

Summary

Done.