Machine Learning with MATLAB Code: CNN Prediction and LSTM Prediction (Part 17)

Code, Data, and Results

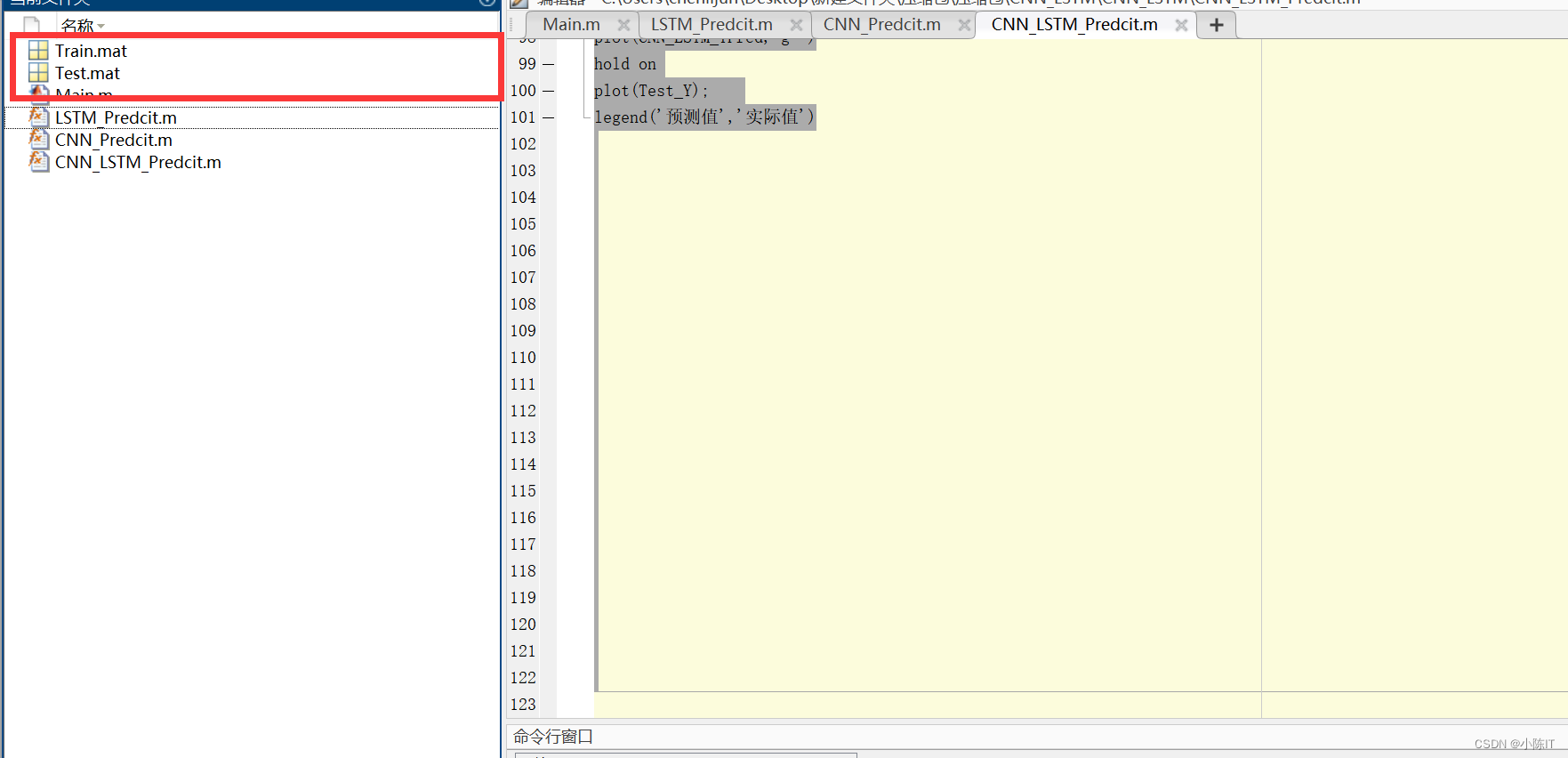

Code

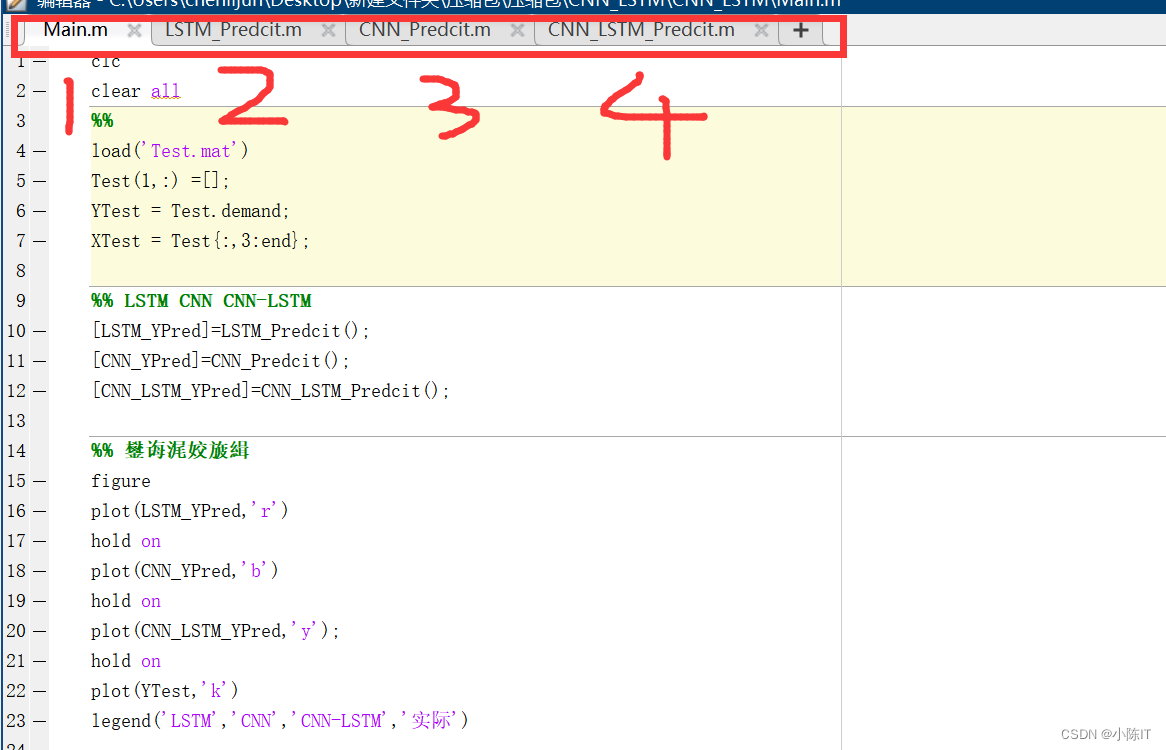

The code files below are listed in the following order:

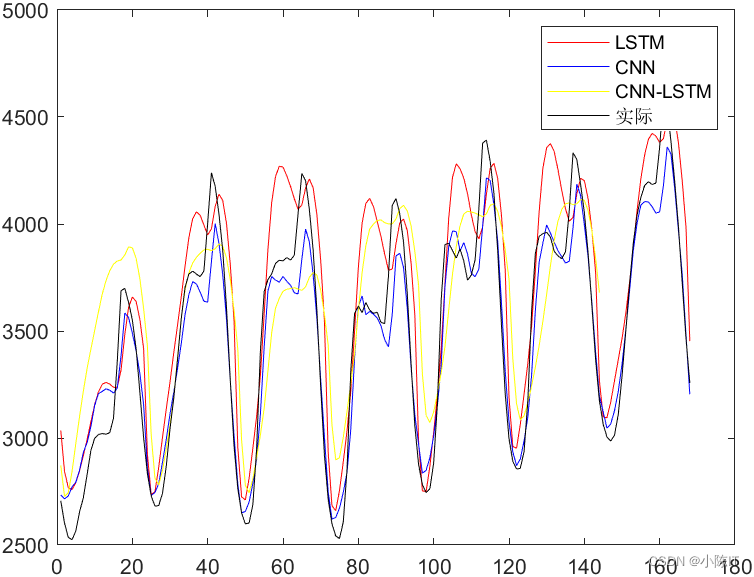

1.

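    clc
    clear all
    %% Load the test set
    load('Test.mat')
    Test(1,:) = [];                 % drop the first row
    YTest = Test.demand;            % target: demand
    XTest = Test{:,3:end};          % features: columns 3 to end
    %% LSTM, CNN, CNN-LSTM
    [LSTM_YPred] = LSTM_Predcit();
    [CNN_YPred] = CNN_Predcit();
    [CNN_LSTM_YPred] = CNN_LSTM_Predcit();
    %% Plot the comparison
    figure
    plot(LSTM_YPred,'r')
    hold on
    plot(CNN_YPred,'b')
    % CNN_LSTM_Predcit uses windows of k = 24 steps, so its i-th output
    % corresponds to test sample i+k-1; shift the x-axis to line it up.
    k = 24;
    plot(k:k+numel(CNN_LSTM_YPred)-1, CNN_LSTM_YPred,'y')
    plot(YTest,'k')
    legend('LSTM','CNN','CNN-LSTM','Actual')

2.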

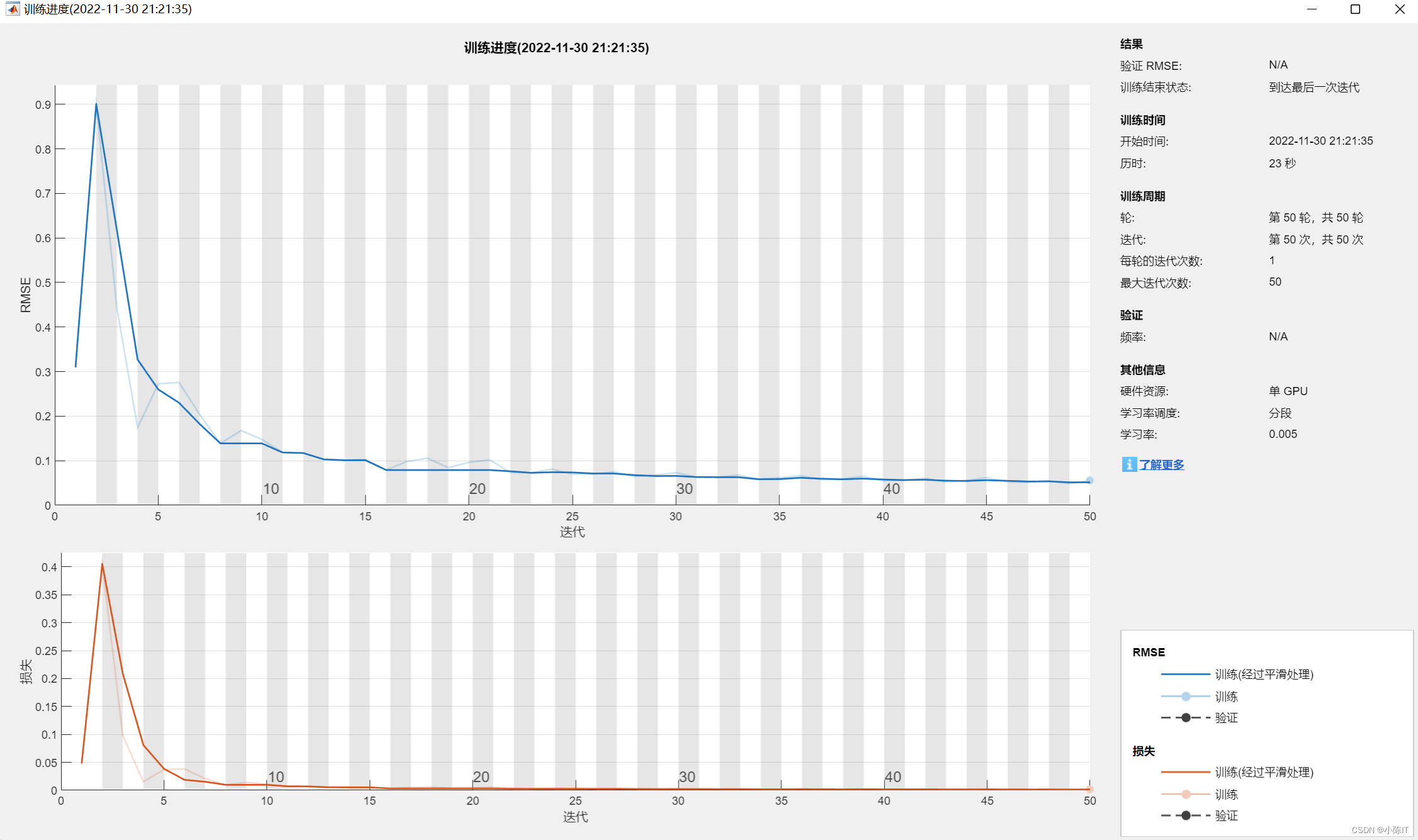

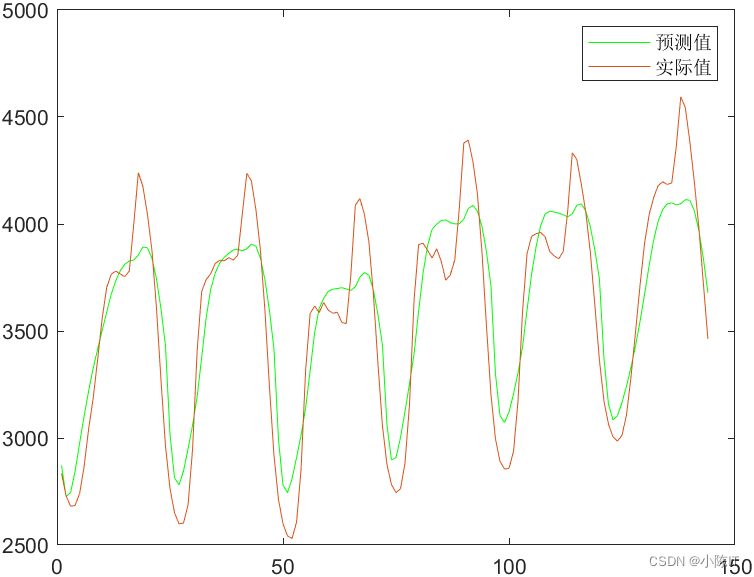
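The prediction functions in this post scale inputs and targets to [0, 1] with `mapminmax` before training and map predictions back afterwards. As a rough illustration of what those three calls compute, here is a manual per-row sketch in base MATLAB. The data values are made up, and the sketch assumes no row is constant (otherwise the denominator is zero, a case `mapminmax` handles separately).

```matlab
% Manual per-row min-max scaling to [0, 1] (toy data, not from Train.mat).
X = [1 2 3 4; 10 20 40 30];          % 2 features (rows) x 4 samples (columns)

xmin = min(X, [], 2);                % per-feature minimum of the training data
xmax = max(X, [], 2);                % per-feature maximum of the training data

% Forward scaling, the same arithmetic as mapminmax(X, 0, 1)
Xn = (X - xmin) ./ (xmax - xmin);

% 'apply': scale new data with the TRAINING minimum and maximum
Xnew  = [2.5; 25];
XnewN = (Xnew - xmin) ./ (xmax - xmin);

% 'reverse': map scaled values back to the original units
Xback = Xn .* (xmax - xmin) + xmin;

disp(Xn)
disp(max(abs(Xback(:) - X(:))))      % round-trip error
```

Reusing the training statistics for the test set, as `mapminmax('apply', ...)` does, keeps information from the test data out of the scaling step.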

    function [LSTM_YPred] = LSTM_Predcit()
    %% Data initialization
    load('Train.mat')
    Train(1,:) = [];
    YTrain = Train.demand;
    XTrain = Train{:,3:end};
    load('Test.mat')
    Test(1,:) = [];
    YTest = Test.demand;
    XTest = Test{:,3:end};
    %% Normalize the training set (rows = features, columns = time steps)
    XTrain = XTrain';
    YTrain = YTrain';
    [XTrainStandardized,Xps_in] = mapminmax(XTrain,0,1);
    [YTrainStandardized,Yps_out] = mapminmax(YTrain,0,1);
    %% Build the network
    layers = [
        sequenceInputLayer(6,"Name","input")
        lstmLayer(128,"Name","lstm")
        dropoutLayer(0.1,"Name","drop")
        fullyConnectedLayer(1,"Name","fc")
        regressionLayer("Name","regressionoutput")];
    options = trainingOptions('adam',...
        'MaxEpochs',50,...
        'GradientThreshold',1,...
        'InitialLearnRate',0.005,...
        'LearnRateSchedule','piecewise',...
        'LearnRateDropPeriod',125,...   % longer than MaxEpochs, so the rate never drops
        'LearnRateDropFactor',0.2,...
        'Verbose',0,...
        'MiniBatchSize',2,...
        'Plots','training-progress');
    %% Train the network
    net = trainNetwork(XTrainStandardized,YTrainStandardized,layers,options);
    %% Normalize the test set with the training-set settings
    XTest = XTest';
    XTestStandardized = mapminmax('apply',XTest,Xps_in);
    %% Multi-step prediction
    % Initialize the network state by running it over the training sequence
    net = predictAndUpdateState(net,XTrainStandardized);
    numTimeStepsTest = size(XTest,2);
    LSTM_YPred = zeros(1,numTimeStepsTest);
    for i = 1:numTimeStepsTest
        [net, LSTM_YPred(i)] = predictAndUpdateState(net,XTestStandardized(:,i),'ExecutionEnvironment','cpu');
    end
    % Map the predictions back to the original scale
    LSTM_YPred = mapminmax('reverse',LSTM_YPred,Yps_out);
    %% Visualize the results
    figure
    plot(LSTM_YPred,'g-')
    hold on
    plot(Test.demand);
    legend('Predicted','Actual')

3.

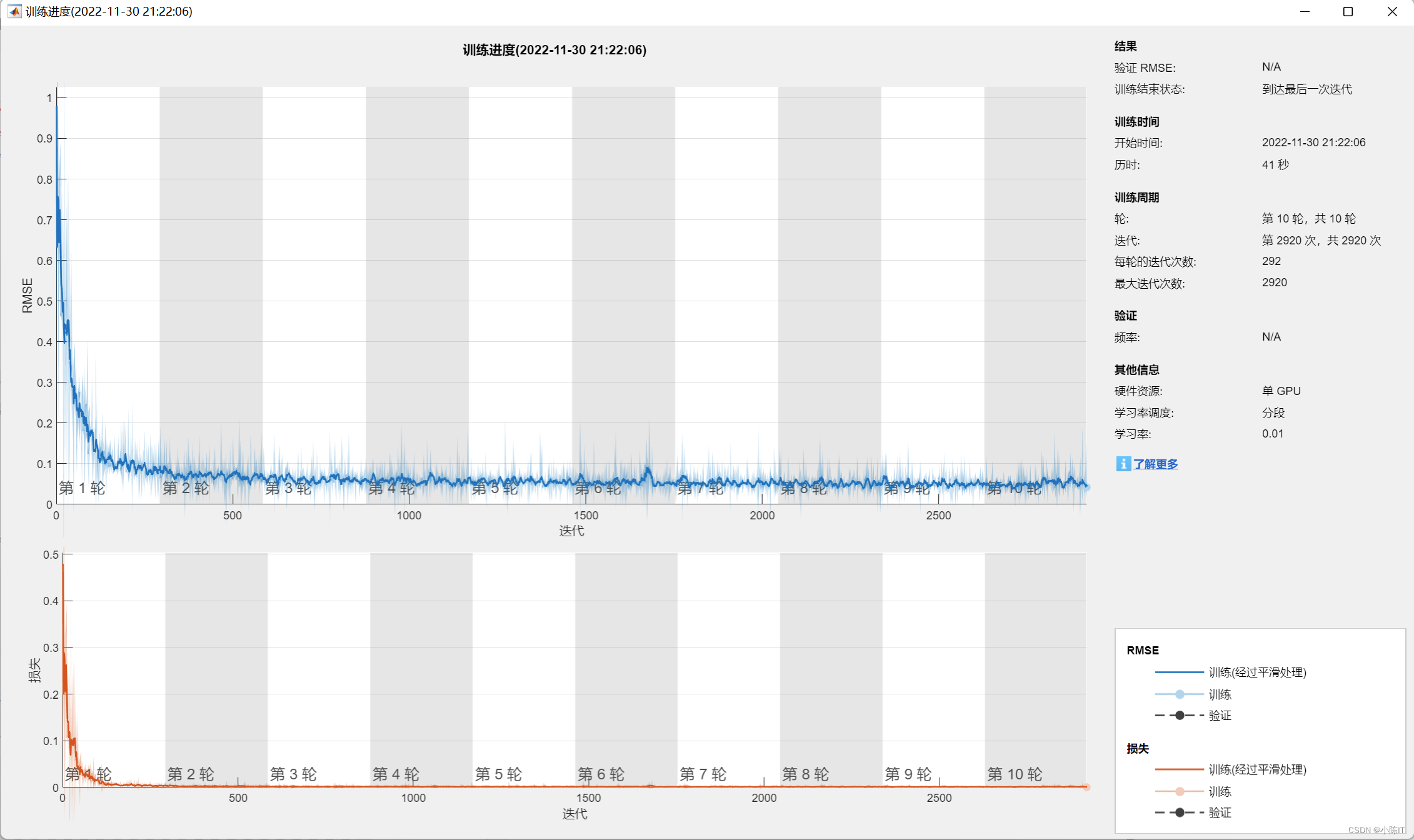

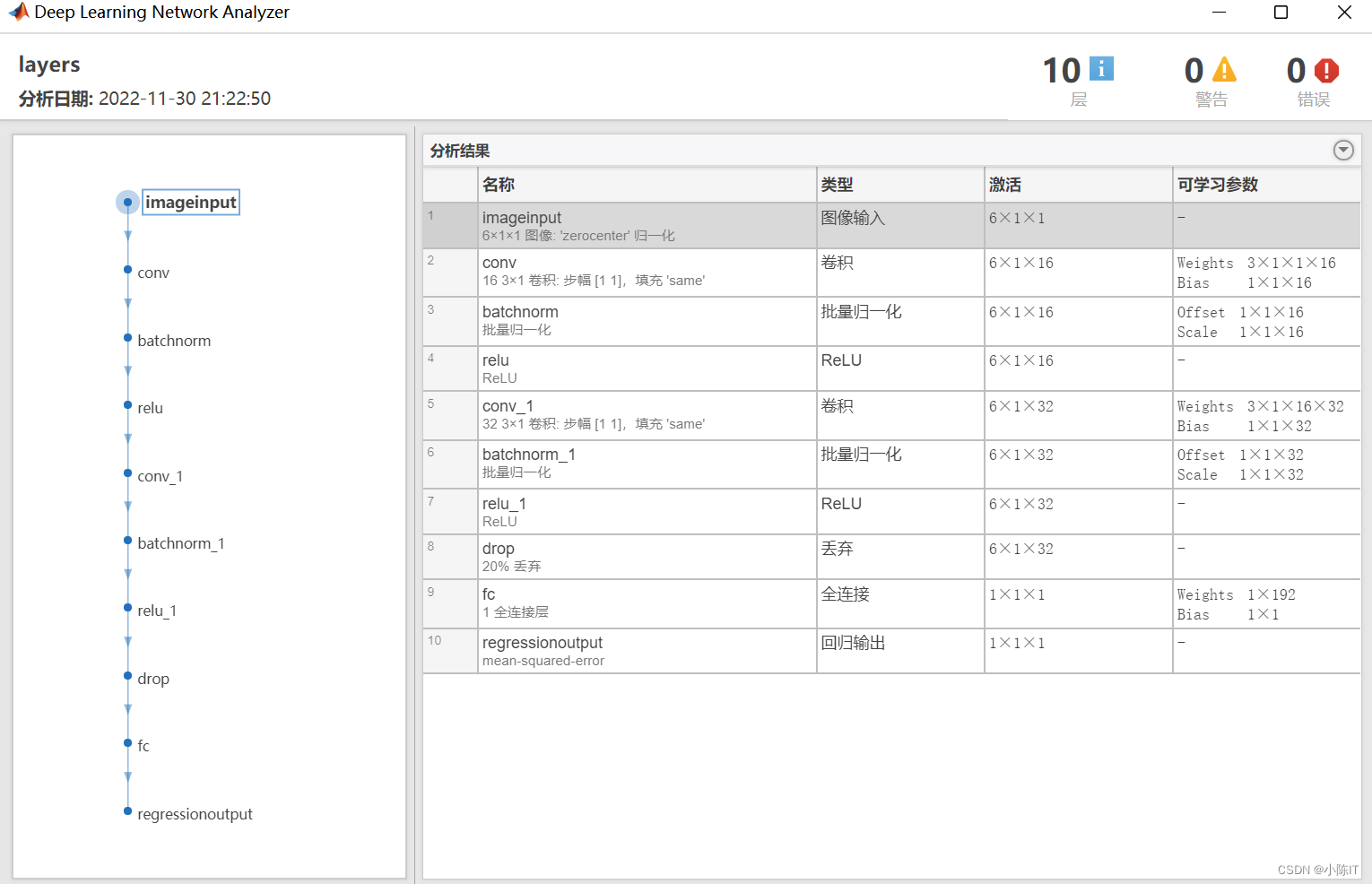

    function [CNN_YPred] = CNN_Predcit()
    %% Data initialization
    load('Train.mat')
    Train(1,:) = [];
    XTrain = (Train{:,3:end})';
    YTrain = (Train.demand)';
    load('Test.mat')
    Test(1,:) = [];
    XTest = (Test{:,3:end})';
    YTest = (Test.demand)';
    M = size(XTrain,2);   % number of training samples
    N = size(XTest,2);    % number of test samples
    %% Normalize the data using the training-set settings
    [X_train,ps_input] = mapminmax(XTrain,0,1);
    X_test = mapminmax('apply',XTest,ps_input);
    [Y_train,ps_output] = mapminmax(YTrain,0,1);
    Y_test = mapminmax('apply',YTest,ps_output);
    %% Reshape each sample into a 6-by-1-by-1 "image"
    X_train = double(reshape(X_train,6,1,1,M));
    X_test = double(reshape(X_test,6,1,1,N));
    Y_train = double(Y_train)';
    Y_test = double(Y_test)';
    %% Build the network
    layers = [
        imageInputLayer([6 1 1],"Name","imageinput")                   % input
        convolution2dLayer([3 1],16,"Name","conv","Padding","same")    % convolution
        batchNormalizationLayer("Name","batchnorm")                    % batch normalization
        reluLayer("Name","relu")                                       % activation
        convolution2dLayer([3 1],32,"Name","conv_1","Padding","same")
        batchNormalizationLayer("Name","batchnorm_1")
        reluLayer("Name","relu_1")
        dropoutLayer(0.2,"Name","drop")
        fullyConnectedLayer(1,"Name","fc")                             % fully connected output
        regressionLayer("Name","regressionoutput")];                   % regression
    %% Training options
    options = trainingOptions('sgdm',...        % SGD with momentum
        'MiniBatchSize',30,...                  % 30 samples per mini-batch
        'MaxEpochs',10,...                      % maximum number of epochs
        'InitialLearnRate',0.01,...             % initial learning rate
        'LearnRateSchedule','piecewise',...     % piecewise learning-rate decay
        'LearnRateDropFactor',0.5,...           % decay factor
        'LearnRateDropPeriod',400,...           % decay every 400 epochs (never reached with MaxEpochs = 10)
        'Shuffle','every-epoch',...             % reshuffle the data every epoch
        'Plots','training-progress',...         % plot the training curves
        'Verbose',false);
    %% Train the model
    net = trainNetwork(X_train,Y_train,layers,options);
    %% Predict
    CNN_YPred = predict(net,X_test);
    %% Undo the normalization
    % Transpose to 1-by-N so the row count matches ps_output
    CNN_YPred = mapminmax('reverse',double(CNN_YPred'),ps_output);
    %% Root-mean-square error, computed in the original units
    error2 = sqrt(sum((CNN_YPred-YTest).^2)./N);
    %% Show the network architecture
    analyzeNetwork(layers)
    %% Plot
    figure
    plot(1:N,YTest,'r-',1:N,CNN_YPred,'b','LineWidth',1)
    legend('Actual','Predicted')
    xlabel('Test sample')
    ylabel('Prediction')
    titleText = {'Test-set prediction comparison';['RMSE=' num2str(error2)]};
    title(titleText)
    xlim([1,N])
    grid
    % %% Additional metrics
    % % R2
    % R2 = 1 - norm(YTest - CNN_YPred)^2 / norm(YTest - mean(YTest))^2;
    % disp(['Test-set R2: ', num2str(R2)])
    % % MAE
    % mae2 = sum(abs(CNN_YPred - YTest)) ./ N;
    % disp(['Test-set MAE: ', num2str(mae2)])
    % % MBE
    % mbe2 = sum(CNN_YPred - YTest) ./ N;
    % disp(['Test-set MBE: ', num2str(mbe2)])
    figure
    plot(CNN_YPred)
    hold on
    plot(YTest)
    legend('Predicted','Actual')

4.

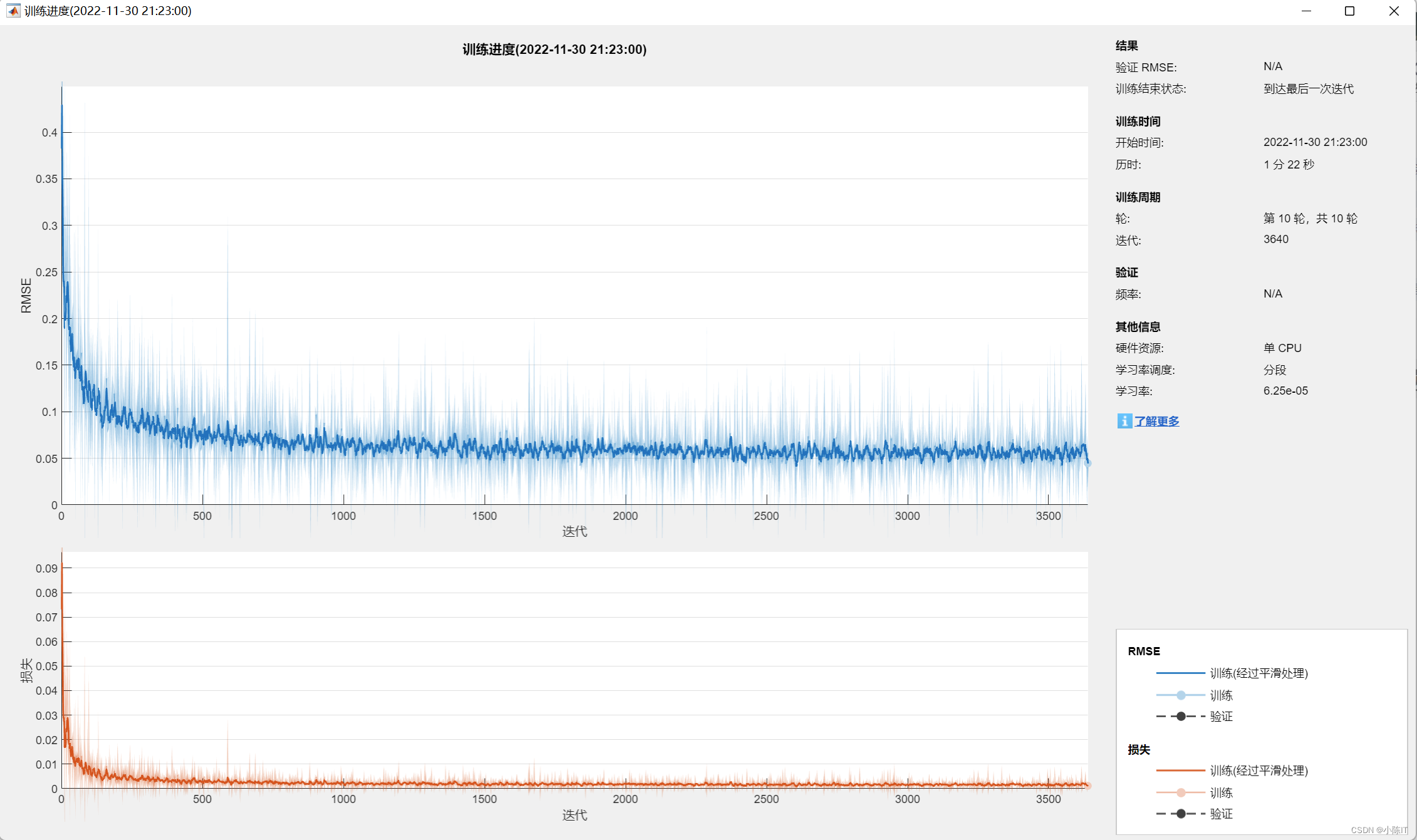
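The CNN-LSTM function that follows turns the series into overlapping windows of `k` time steps, each stored as a 4-D array, and pairs every window with the target at its last step. The toy sketch below shows only that windowing step on made-up numbers; `numFeatures`, `T`, `XWin` and `YWin` are illustrative names, not variables from the post.

```matlab
% Sliding-window construction on toy data (hypothetical values).
numFeatures = 2;  T = 6;  k = 3;               % k = window (lag) length
X = reshape(1:numFeatures*T, numFeatures, T);  % features x time steps
Y = 101:100+T;                                 % one target per time step

numWindows = T - k;                % same loop bound as length(Y)-k in the post
XWin = cell(numWindows, 1);        % N-by-1 cell array of sequences
YWin = zeros(numWindows, 1);
for i = 1:numWindows
    cols    = i:i+k-1;                                    % time steps in window i
    XWin{i} = reshape(X(:, cols), numFeatures, 1, 1, k);  % height x width x channels x steps
    YWin(i) = Y(i+k-1);                                   % target at the window's last step
end

disp(size(XWin{1}))
disp(YWin')
```

With this loop bound the window ending at the final time step is not used, so a series of length `T` yields `T-k` samples, and the first prediction lines up with sample `k` of the original series.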

    function [CNN_LSTM_YPred] = CNN_LSTM_Predcit()
    %% Data set (columns are features, rows are samples; 6 features, 1 output)
    load('Train.mat')
    Train(1,:) = [];
    XTrain = Train{:,3:end};
    YTrain = Train.demand;
    [XTrain_norm,Xopt] = mapminmax(XTrain',0,1);
    [YTrain_norm,Yopt] = mapminmax(YTrain',0,1);
    k = 24;   % lag (window) length
    % Convert each window of k steps into a sequence of 6-by-1-by-1 images
    numTrain = length(YTrain_norm)-k;
    Train_XNorm = cell(numTrain,1);   % trainNetwork expects an N-by-1 cell array of sequences
    Train_YNorm = zeros(numTrain,1);
    for i = 1:numTrain
        Train_XNorm{i} = reshape(XTrain_norm(:,i:i+k-1),6,1,1,k);
        Train_YNorm(i) = YTrain_norm(i+k-1);   % target at the last step of the window
    end
    % Test set
    load('Test.mat')
    Test(1,:) = [];
    YTest = Test.demand;
    XTest = Test{:,3:end};
    [XTestnorm] = mapminmax('apply',XTest',Xopt);
    [YTestnorm] = mapminmax('apply',YTest',Yopt);
    XTest = XTest';
    numTest = length(YTestnorm)-k;
    Test_XNorm = cell(numTest,1);
    Test_YNorm = zeros(numTest,1);
    Test_Y = zeros(numTest,1);
    for i = 1:numTest
        Test_XNorm{i} = reshape(XTestnorm(:,i:i+k-1),6,1,1,k);
        Test_YNorm(i) = YTestnorm(i+k-1);
        Test_Y(i) = YTest(i+k-1);
    end
    %% Layer sizes and training options
    inputSize = size(Train_XNorm{1},1);   % feature dimension of the input x
    outputSize = 1;                       % dimension of the output y
    numhidden_units1 = 50;
    numhidden_units2 = 20;
    opts = trainingOptions('adam', ...
        'MaxEpochs',10, ...
        'GradientThreshold',1, ...
        'ExecutionEnvironment','cpu', ...
        'InitialLearnRate',0.001, ...
        'LearnRateSchedule','piecewise', ...
        'LearnRateDropPeriod',2, ...      % update the learning rate every 2 epochs
        'LearnRateDropFactor',0.5, ...
        'Shuffle','once', ...
        'SequenceLength',k, ...           % sequence length
        'MiniBatchSize',24, ...
        'Plots','training-progress', ...
        'Verbose',0);
    %% CNN-LSTM
    layers = [ ...
        sequenceInputLayer([inputSize,1,1],'Name','input')   % input layer
        sequenceFoldingLayer('Name','fold')
        convolution2dLayer([2,1],10,'Stride',[1,1],'Name','conv1')
        batchNormalizationLayer('Name','batchnorm1')
        reluLayer('Name','relu1')
        maxPooling2dLayer([1,3],'Stride',1,'Padding','same','Name','maxpool')
        sequenceUnfoldingLayer('Name','unfold')
        flattenLayer('Name','flatten')
        gruLayer(numhidden_units1,'OutputMode','sequence','Name','hidden1')
        dropoutLayer(0.3,'Name','dropout_1')
        lstmLayer(numhidden_units2,'OutputMode','last','Name','hidden2')
        dropoutLayer(0.3,'Name','dropout_2')
        fullyConnectedLayer(outputSize,'Name','fullconnect')   % fully connected layer (sets the output dimension)
        % tanhLayer('Name','softmax')
        regressionLayer('Name','output')];
    lgraph = layerGraph(layers);
    lgraph = connectLayers(lgraph,'fold/miniBatchSize','unfold/miniBatchSize');
    %% Train the network
    tic
    net = trainNetwork(Train_XNorm,Train_YNorm,lgraph,opts);
    toc
    %% Show the network architecture
    analyzeNetwork(lgraph)
    %% Test
    CNN_LSTM_YPred_norm = predict(net,Test_XNorm);
    CNN_LSTM_YPred = mapminmax('reverse',CNN_LSTM_YPred_norm',Yopt);
    CNN_LSTM_YPred = CNN_LSTM_YPred';
    %% Plot
    figure
    plot(CNN_LSTM_YPred,'g-')
    hold on
    plot(Test_Y);
    legend('Predicted','Actual')

Data

Results
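Besides the plots, the models can be compared numerically. The CNN function computes an RMSE and leaves R2, MAE and MBE commented out; the sketch below gathers those four metrics in one place. It uses toy vectors, and `yTrue` and `yPred` are placeholder names to be replaced with the actual demand and one model's predictions over the same samples.

```matlab
% Regression error metrics on toy vectors (placeholder data).
yTrue = [10 12 14 16];
yPred = [11 11 15 15];

err  = yPred(:) - yTrue(:);      % force column vectors so shapes always match
rmse = sqrt(mean(err.^2));       % root-mean-square error
mae  = mean(abs(err));           % mean absolute error
mbe  = mean(err);                % mean bias error (sign shows over/under-prediction)
r2   = 1 - sum(err.^2) / sum((yTrue(:) - mean(yTrue)).^2);   % coefficient of determination

fprintf('RMSE = %.4f, MAE = %.4f, MBE = %.4f, R2 = %.4f\n', rmse, mae, mbe, r2);
```

Because the CNN-LSTM model outputs one value per 24-step window, it returns fewer predictions than there are test samples; its errors should be computed against the matching slice of the test targets (`Test_Y` inside that function) rather than the full `YTest`.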

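If you need the code and data, leave your email address in the comments; replies usually arrive within a day. Likes and follows are appreciated, thank you!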