k8s Installation Tutorial

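- 1 Introduction to k8s
- 2 Environment Setup
  - 2.1 Host Preparation
  - 2.2 Host Initialization
    - 2.2.1 Install wget
    - 2.2.2 Switch the yum Repository
    - 2.2.3 Install Common Tools
    - 2.2.4 Disable the Firewall
    - 2.2.5 Disable SELinux
    - 2.2.6 Disable swap
    - 2.2.7 Synchronize Time
    - 2.2.8 Adjust Linux Kernel Parameters
    - 2.2.9 Configure IPVS
  - 2.3 Container Installation
    - 2.3.1 Configure the yum Repository
    - 2.3.2 Install Docker
    - 2.3.3 Configure a Registry Mirror
  - 2.4 CRI Environment Setup
    - 2.4.1 Download
    - 2.4.2 Install and Configure
  - 2.5 k8s Installation
    - 2.5.1 Configure the k8s Repository
    - 2.5.2 Install kubeadm, kubelet and kubectl
    - 2.5.3 List the Required Images on the Master Node
    - 2.5.4 Pull the Images on the Master Node
    - 2.5.5 Initialize the Master Node
    - 2.5.6 Join the Worker Nodes to the Master Node
    - 2.5.7 Install a Network Plugin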

1 Introduction to k8s

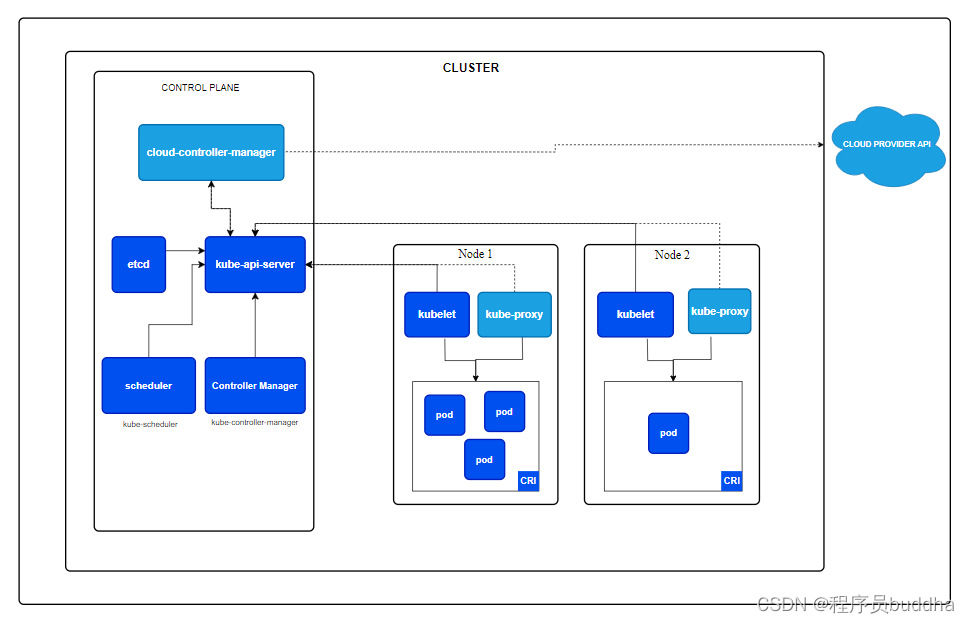

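Kubernetes is abbreviated as k8s because there are eight letters between the "k" and the "s". It is written in Go. The first version was released in September 2014, and the first official release followed in July 2015.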

Official website: https://kubernetes.io

Code repository: https://github.com/kubernetes/kubernetes

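At its core, Kubernetes is a cluster of servers. It runs specific programs on every node in the cluster to manage the containers on that node. Its goal is to automate resource management, and it provides the following main features: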

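- Self-healing: when a container crashes, a new container is started quickly, within about one second.
- Elastic scaling: the number of running containers in the cluster is adjusted automatically as needed.
- Service discovery: a service can find the services it depends on through automatic discovery.
- Load balancing: if a service runs multiple containers, requests are balanced across them automatically.
- Version rollback: if a newly released version turns out to have problems, it can be rolled back to the previous version immediately.
- Storage orchestration: storage volumes are created automatically according to each container's needs.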

2 Environment Setup

The cluster consists of one master node and two worker nodes (a diagram of this topology belongs here).

2.1 Host Preparation

| No. | Host IP | Spec | Hostname |
|---|---|---|---|
| 1 | 192.168.123.92 | CPU: 4 cores, RAM: 4 GB, disk: 40 GB | k8s-master |
| 2 | 192.168.123.93 | CPU: 2 cores, RAM: 4 GB, disk: 40 GB | k8s-node1 |
| 3 | 192.168.123.94 | CPU: 2 cores, RAM: 4 GB, disk: 40 GB | k8s-node2 |
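
The host table maps directly onto name-resolution entries for /etc/hosts. As a rough sketch, the entries could be appended idempotently instead of by hand. The `add_host` helper and the `HOSTS_FILE` variable are hypothetical names introduced here for illustration; the script defaults to a scratch file so it can be tried without touching the system.

```shell
#!/bin/sh
# Sketch: append "IP hostname" pairs to a hosts file only when they are missing.
# HOSTS_FILE defaults to a scratch file so this can be tried without root;
# set HOSTS_FILE=/etc/hosts to apply it for real.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

add_host() {
    # $1 = IP address, $2 = hostname
    if awk -v ip="$1" -v name="$2" '$1 == ip && $2 == name { found = 1 } END { exit !found }' "$HOSTS_FILE"; then
        echo "skip:  $1 $2"
    else
        printf '%s %s\n' "$1" "$2" >> "$HOSTS_FILE"
        echo "added: $1 $2"
    fi
}

add_host 192.168.123.92 k8s-master
add_host 192.168.123.93 k8s-node1
add_host 192.168.123.94 k8s-node2
add_host 192.168.123.92 k8s-master   # already present, so nothing is appended
```

Running it a second time leaves the file unchanged, which makes it safe to reuse on all three hosts.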

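    # On host 1; disconnect and reconnect the terminal afterwards
    hostnamectl set-hostname k8s-master
    # On host 2; disconnect and reconnect the terminal afterwards
    hostnamectl set-hostname k8s-node1
    # On host 3; disconnect and reconnect the terminal afterwards
    hostnamectl set-hostname k8s-node2

    # On all three hosts: add name-resolution entries
    # vim /etc/hosts
    192.168.123.92 k8s-master
    192.168.123.93 k8s-node1
    192.168.123.94 k8s-node2

2.2 Host Initialization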

2.2.1 Install wget

    yum -y install wget

2.2.2 Switch the yum Repository

The operating system here is CentOS 7; switch it to the Aliyun yum mirror.

    # Back up the existing repo file
    mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.bak
    # Download the mirror repo file
    wget -c http://mirrors.aliyun.com/repo/Centos-7.repo -O /etc/yum.repos.d/CentOS-Base.repo
    # Rebuild the cache
    yum clean all && yum makecache

2.2.3 Install Common Tools

    yum -y install vim lrzsz tree

2.2.4 Disable the Firewall

    systemctl stop firewalld
    systemctl disable firewalld

2.2.5 Disable SELinux
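
Making the SELinux change permanent comes down to setting `SELINUX=disabled` in /etc/selinux/config. A minimal sketch of doing that without an editor follows. The `disable_selinux_in` function is a hypothetical helper, and the demo runs against a scratch file whose two lines are assumed sample content, not a copy of a real config.

```shell
#!/bin/sh
# Sketch: set SELINUX=disabled in a config file without opening an editor.
# A backup with a .bak suffix is left next to the file.
disable_selinux_in() {
    # $1 = path to the config file (on a real host: /etc/selinux/config)
    sed -i.bak 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# Demo on a scratch file with assumed sample content
cfg=$(mktemp)
printf 'SELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$cfg"
disable_selinux_in "$cfg"
cat "$cfg"
```

The pattern is anchored on `SELINUX=` so the `SELINUXTYPE=` line is left alone.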

    # Takes effect immediately, until the next reboot
    setenforce 0
    # Permanent change
    # vim /etc/selinux/config
    # Set the value of SELINUX= to disabled, i.e. SELINUX=disabled

2.2.6 Disable swap
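
Disabling swap permanently means commenting out the swap entry in /etc/fstab. The following is a sketch of a scripted variant, assuming the swap entry has the literal word `swap` as a whitespace-separated field. `comment_out_swap` is a hypothetical helper, and the root filesystem line in the demo file is an assumed example.

```shell
#!/bin/sh
# Sketch: comment out swap entries in an fstab-style file.
# A backup with a .bak suffix is left next to the file.
comment_out_swap() {
    # $1 = path to an fstab-style file (on a real host: /etc/fstab)
    # Only lines that are not already comments are touched.
    sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/s/^/#/' "$1"
}

# Demo on a scratch file; the centos-root line is an assumed example
fstab=$(mktemp)
cat > "$fstab" << 'EOF'
/dev/mapper/centos-root /    xfs  defaults 0 0
/dev/mapper/centos-swap swap swap defaults 0 0
EOF
comment_out_swap "$fstab"
comment_out_swap "$fstab"   # running it again changes nothing
cat "$fstab"
```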

    # Turn swap off immediately, until the next reboot
    swapoff -a
    # Permanent change
    # vim /etc/fstab
    # Comment out the line [/dev/mapper/centos-swap swap swap defaults 0 0]

2.2.7 Synchronize Time
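
Pointing chrony at a single time server means commenting out the default `server` lines in /etc/chrony.conf and adding one new line. A hedged sketch of the same edit as a repeatable function follows. `use_ntp_server` is a hypothetical helper, and the `driftfile` line in the demo file is assumed sample content.

```shell
#!/bin/sh
# Sketch: comment out existing "server" lines and add the chosen NTP server.
use_ntp_server() {
    # $1 = chrony config path (on a real host: /etc/chrony.conf), $2 = NTP server
    # Every active "server" line except the chosen one gets commented out.
    sed -i.bak "/^server $2 /!s/^server /#server /" "$1"
    # Add the chosen server only if it is not already configured.
    grep -qx "server $2 iburst" "$1" || echo "server $2 iburst" >> "$1"
}

# Demo on a scratch file
conf=$(mktemp)
cat > "$conf" << 'EOF'
server 0.centos.pool.ntp.org iburst
server 1.centos.pool.ntp.org iburst
server 2.centos.pool.ntp.org iburst
server 3.centos.pool.ntp.org iburst
driftfile /var/lib/chrony/drift
EOF
use_ntp_server "$conf" ntp.aliyun.com
use_ntp_server "$conf" ntp.aliyun.com   # second run leaves the file as it is
cat "$conf"
```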

    # Install
    yum -y install chrony

    # vim /etc/chrony.conf and comment out the following lines
    #server 0.centos.pool.ntp.org iburst
    #server 1.centos.pool.ntp.org iburst
    #server 2.centos.pool.ntp.org iburst
    #server 3.centos.pool.ntp.org iburst
    # Add the Aliyun time server
    server ntp.aliyun.com iburst

    # After saving, enable the service at boot, reload the configuration and restart it
    systemctl enable chronyd
    systemctl daemon-reload
    systemctl restart chronyd

    # Verify that the setting took effect
    # Option 1
    chronyc sources
    # Option 2
    date

2.2.8 Adjust Linux Kernel Parameters

    cat > /etc/sysctl.d/k8s.conf << EOF
    # Kernel parameter tuning
    vm.swappiness=0
    # Make traffic that crosses a bridge pass through iptables/netfilter as well
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.ipv4.ip_forward = 1
    EOF

    # Load the bridge netfilter and overlay modules so the settings can apply
    modprobe br_netfilter
    modprobe overlay

    # Reload the settings
    sysctl -p /etc/sysctl.d/k8s.conf
    # Terminal output:
    # vm.swappiness = 0
    # net.bridge.bridge-nf-call-ip6tables = 1
    # net.bridge.bridge-nf-call-iptables = 1
    # net.ipv4.ip_forward = 1

2.2.9 Configure IPVS
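
Once the IPVS kernel modules from this section are loaded, the check amounts to looking for each module name in the first column of `lsmod`. A small sketch that reports missing modules follows. `missing_modules` is a hypothetical helper; the sample text is the `lsmod` output quoted in this section, and on a real host the input would come from `lsmod` itself.

```shell
#!/bin/sh
# Sketch: report which kernel modules are absent from lsmod-style text.
missing_modules() {
    # stdin: lsmod-style text; arguments: module names to look for.
    # Prints every requested module that does not appear in column 1.
    loaded=$(awk '{ print $1 }')
    for mod in "$@"; do
        printf '%s\n' "$loaded" | grep -qx "$mod" || echo "$mod"
    done
}

# Sample input taken from the lsmod output quoted in this section.
# On a real host: lsmod | missing_modules ip_vs ip_vs_rr ...
sample='ip_vs_sh 12688 0
ip_vs_wrr 12697 0
ip_vs_rr 12600 0
ip_vs 145458 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr
nf_conntrack 139264 1 ip_vs
libcrc32c 12644 3 xfs,ip_vs,nf_conntrack'

missing=$(printf '%s\n' "$sample" | missing_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack)
if [ -z "$missing" ]; then
    echo "all IPVS modules loaded"
else
    echo "missing: $missing"
fi
```

Matching on the whole first column avoids false positives from the dependency list in the last column.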

    # Install ipset and ipvsadm
    yum install ipset ipvsadm -y

    # Write the modules to load into a script file (the shebang must be the first line)
    cat > /etc/sysconfig/modules/ipvs.modules << EOF
    #!/bin/bash
    modprobe -- ip_vs
    modprobe -- ip_vs_rr
    modprobe -- ip_vs_wrr
    modprobe -- ip_vs_sh
    modprobe -- nf_conntrack
    EOF

    # Make the script executable
    chmod +x /etc/sysconfig/modules/ipvs.modules

    # Run the script
    /bin/bash /etc/sysconfig/modules/ipvs.modules

    # Check that the modules were loaded
    lsmod | grep -e ip_vs -e nf_conntrack_ipv4
    # Terminal output:
    # ip_vs_sh 12688 0
    # ip_vs_wrr 12697 0
    # ip_vs_rr 12600 0
    # ip_vs 145458 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr
    # nf_conntrack 139264 1 ip_vs
    # libcrc32c 12644 3 xfs,ip_vs,nf_conntrack

2.3 Container Installation

2.3.1 Configure the yum Repository

    # Dependencies needed for the yum-config-manager command
    yum install -y yum-utils device-mapper-persistent-data lvm2
    # Add the Aliyun mirror of the Docker repository
    yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

2.3.2 Install Docker

    # No version is specified here, so the latest Docker is installed
    yum install -y docker-ce

2.3.3 Configure a Registry Mirror

    # JSON does not allow comments, so the notes stay outside the file:
    # - registry-mirrors holds a personal Aliyun accelerator address
    # - for a private registry, add "insecure-registries": ["domain or ip"]
    mkdir -p /etc/docker
    cat > /etc/docker/daemon.json << EOF
    {
      "registry-mirrors": ["https://afnsj47q.mirror.aliyuncs.com"],
      "exec-opts": ["native.cgroupdriver=systemd"]
    }
    EOF

    [root@k8s-master ~]# cat /etc/docker/daemon.json
    {
      "registry-mirrors": ["https://afnsj47q.mirror.aliyuncs.com"],
      "exec-opts": ["native.cgroupdriver=systemd"]
    }

    # Reload the configuration, enable Docker at boot and (re)start the service
    systemctl daemon-reload
    systemctl enable docker
    systemctl restart docker

2.4 CRI Environment Setup

2.4.1 Download

Releases page: https://github.com/Mirantis/cri-dockerd/releases
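
The v0.3.8 download URL used in this section suggests a naming pattern of `v<version>/cri-dockerd-<version>.<arch>.tgz`. The sketch below builds the URL from a version and architecture. `cri_dockerd_url` is a hypothetical helper, and the pattern is an assumption inferred from that single URL, so check the releases page before relying on it for other versions or architectures.

```shell
#!/bin/sh
# Sketch: build a cri-dockerd release URL from version and architecture.
cri_dockerd_url() {
    # $1 = version without the leading "v", $2 = architecture (default: amd64)
    ver=$1
    arch=${2:-amd64}
    echo "https://github.com/Mirantis/cri-dockerd/releases/download/v${ver}/cri-dockerd-${ver}.${arch}.tgz"
}

cri_dockerd_url 0.3.8
```

The result can be passed to `wget -c "$(cri_dockerd_url 0.3.8)"`.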

    # v0.3.8 was the latest cri-dockerd release when this was written
    wget -c https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.8/cri-dockerd-0.3.8.amd64.tgz

2.4.2 Install and Configure

    # Extract the downloaded archive
    tar -xvf cri-dockerd-0.3.8.amd64.tgz -C /usr/local
    mv /usr/local/cri-dockerd/cri-dockerd /usr/local/bin
    cri-dockerd --version

    # systemd unit file for cri-dockerd
    # (the heredoc delimiter is quoted so that $MAINPID is written literally)
    cat > /etc/systemd/system/cri-dockerd.service <<-'EOF'
    [Unit]
    Description=CRI Interface for Docker Application Container Engine
    Documentation=https://docs.mirantis.com
    After=network-online.target firewalld.service docker.service
    Wants=network-online.target

    [Service]
    Type=notify
    ExecStart=/usr/local/bin/cri-dockerd --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9 --network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin --container-runtime-endpoint=unix:///var/run/cri-dockerd.sock --cri-dockerd-root-directory=/var/lib/dockershim --docker-endpoint=unix:///var/run/docker.sock --cri-dockerd-root-directory=/var/lib/docker
    ExecReload=/bin/kill -s HUP $MAINPID
    TimeoutSec=0
    RestartSec=2
    Restart=always
    StartLimitBurst=3
    StartLimitInterval=60s
    LimitNOFILE=infinity
    LimitNPROC=infinity
    LimitCORE=infinity
    TasksMax=infinity
    Delegate=yes
    KillMode=process

    [Install]
    WantedBy=multi-user.target
    EOF

    # Socket unit; PartOf must name the service unit created above
    cat > /etc/systemd/system/cri-dockerd.socket <<-EOF
    [Unit]
    Description=CRI Docker Socket for the API
    PartOf=cri-dockerd.service

    [Socket]
    ListenStream=/var/run/cri-dockerd.sock
    SocketMode=0660
    SocketUser=root
    SocketGroup=docker

    [Install]
    WantedBy=sockets.target
    EOF

    # Reload systemd, enable the service at boot and start it
    systemctl daemon-reload
    systemctl enable cri-dockerd
    systemctl start cri-dockerd

2.5 k8s Installation

2.5.1 Configure the k8s Repository

The Aliyun yum mirror is used here.

    cat > /etc/yum.repos.d/kubernetes.repo << EOF
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
    enabled=1
    gpgcheck=0
    repo_gpgcheck=0
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF

2.5.2 Install kubeadm, kubelet and kubectl

    # Install kubeadm, kubelet and kubectl
    yum -y install kubeadm kubelet kubectl
    # Enable kubelet at boot and start it
    systemctl enable kubelet && systemctl restart kubelet

2.5.3 List the Required Images on the Master Node

    [root@k8s-master ~]# kubeadm config images list
    I1220 18:09:30.152344 26378 version.go:256] remote version is much newer: v1.29.0; falling back to: stable-1.28
    registry.k8s.io/kube-apiserver:v1.28.4
    registry.k8s.io/kube-controller-manager:v1.28.4
    registry.k8s.io/kube-scheduler:v1.28.4
    registry.k8s.io/kube-proxy:v1.28.4
    registry.k8s.io/pause:3.9
    registry.k8s.io/etcd:3.5.9-0
    registry.k8s.io/coredns/coredns:v1.10.1

2.5.4 Pull the Images on the Master Node
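
Pulling through a mirror relies on mapping each `registry.k8s.io` image name onto the mirror's flat namespace by keeping only the part after the last `/`. The sketch below shows that mapping for two of the listed images. `mirror_name` is a hypothetical helper that mirrors what `awk -F'/' '{print $NF}'` does in this section's script.

```shell
#!/bin/sh
# Sketch: map an upstream image name to its name on a flat mirror namespace.
MIRROR=registry.aliyuncs.com/google_containers

mirror_name() {
    # Keep only the part after the last "/" and prefix it with the mirror.
    echo "$MIRROR/${1##*/}"
}

for img in registry.k8s.io/kube-apiserver:v1.28.4 registry.k8s.io/coredns/coredns:v1.10.1; do
    echo "$img -> $(mirror_name "$img")"
done
```

The coredns image loses its `coredns/` path segment in this mapping, which is why tagging it back under `registry.k8s.io/` yields `registry.k8s.io/coredns:v1.10.1` and a manual retag is needed.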

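    # cat images.sh
    #!/usr/bin/env bash
    # Pull each required image from the Aliyun mirror, retag it under
    # registry.k8s.io, then remove the mirror tag
    images=$(kubeadm config images list --kubernetes-version=1.28.4 | awk -F'/' '{print $NF}')
    for i in ${images}
    do
      docker pull registry.aliyuncs.com/google_containers/$i
      docker tag registry.aliyuncs.com/google_containers/$i registry.k8s.io/$i
      docker rmi registry.aliyuncs.com/google_containers/$i
    done

After the download, one image name does not match the expected list (coredns ends up as registry.k8s.io/coredns instead of registry.k8s.io/coredns/coredns), so it has to be retagged by hand: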

    docker tag registry.k8s.io/coredns:v1.10.1 registry.k8s.io/coredns/coredns:v1.10.1
    docker rmi registry.k8s.io/coredns:v1.10.1

    # kubeadm config images list and docker images now agree
    [root@centos ~]# kubeadm config images list
    I1215 08:55:02.856427 14692 version.go:256] remote version is much newer: v1.29.0; falling back to: stable-1.28
    registry.k8s.io/kube-apiserver:v1.28.4
    registry.k8s.io/kube-controller-manager:v1.28.4
    registry.k8s.io/kube-scheduler:v1.28.4
    registry.k8s.io/kube-proxy:v1.28.4
    registry.k8s.io/pause:3.9
    registry.k8s.io/etcd:3.5.9-0
    registry.k8s.io/coredns/coredns:v1.10.1
    [root@centos ~]# docker images
    REPOSITORY                                TAG       IMAGE ID       CREATED         SIZE
    registry.k8s.io/kube-apiserver            v1.28.4   7fe0e6f37db3   4 weeks ago     126MB
    registry.k8s.io/kube-controller-manager   v1.28.4   d058aa5ab969   4 weeks ago     122MB
    registry.k8s.io/kube-scheduler            v1.28.4   e3db313c6dbc   4 weeks ago     60.1MB
    registry.k8s.io/kube-proxy                v1.28.4   83f6cc407eed   4 weeks ago     73.2MB
    registry.k8s.io/etcd                      3.5.9-0   73deb9a3f702   7 months ago    294MB
    registry.k8s.io/coredns/coredns           v1.10.1   ead0a4a53df8   10 months ago   53.6MB
    registry.k8s.io/pause                     3.9       e6f181688397   14 months ago   744kB

2.5.5 Initialize the Master Node
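
The `kubeadm init` command in this section passes `--service-cidr=10.96.0.0/12` and `--pod-network-cidr=10.244.0.0/16`. These ranges should not overlap each other or the network the hosts live on. The sketch below checks that with plain integer arithmetic. The helper names are hypothetical, and the host network `192.168.123.0/24` is an assumption based on the host IPs in the table; the actual netmask is not stated in this guide.

```shell
#!/bin/sh
# Sketch: check whether two IPv4 CIDR ranges overlap.
ip_to_int() {
    # Convert a dotted-quad IPv4 address to an integer.
    oldifs=$IFS; IFS=.
    set -- $1
    IFS=$oldifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

cidr_range() {
    # Print "first last" integer addresses for a CIDR such as 10.96.0.0/12.
    base=$(ip_to_int "${1%/*}")
    bits=${1#*/}
    size=$(( 1 << (32 - bits) ))
    first=$(( base / size * size ))   # align down to the network address
    echo "$first $(( first + size - 1 ))"
}

cidrs_overlap() {
    # Succeeds (exit 0) when the two CIDR ranges share at least one address.
    set -- $(cidr_range "$1") $(cidr_range "$2")
    [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

check() {
    if cidrs_overlap "$1" "$2"; then
        echo "$1 overlaps $2"
    else
        echo "$1 and $2 are disjoint"
    fi
}

check 10.96.0.0/12 10.244.0.0/16        # service CIDR vs pod CIDR
check 10.96.0.0/12 192.168.123.0/24     # service CIDR vs assumed host network
check 10.244.0.0/16 192.168.123.0/24    # pod CIDR vs assumed host network
```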

    # Initialize directly
    kubeadm init --kubernetes-version=1.28.4 \
    --node-name=k8s-master \
    --apiserver-advertise-address=192.168.123.92 \
    --image-repository registry.k8s.io \
    --service-cidr=10.96.0.0/12 \
    --pod-network-cidr=10.244.0.0/16 \
    --cri-socket=unix:///var/run/cri-dockerd.sock

    # Reset, then initialize
    kubeadm reset -f --cri-socket=unix:///var/run/cri-dockerd.sock && kubeadm init --kubernetes-version=1.28.4 \
    --node-name=k8s-master \
    --apiserver-advertise-address=192.168.123.92 \
    --image-repository registry.aliyuncs.com/google_containers \
    --service-cidr=10.96.0.0/12 \
    --pod-network-cidr=10.244.0.0/16 \
    --cri-socket=unix:///var/run/cri-dockerd.sock

When the master node initializes successfully, the terminal shows:

    To start using your cluster, you need to run the following as a regular user:

      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config

    Alternatively, if you are the root user, you can run:

      export KUBECONFIG=/etc/kubernetes/admin.conf

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      https://kubernetes.io/docs/concepts/cluster-administration/addons/

    Then you can join any number of worker nodes by running the following on each as root:

    kubeadm join 192.168.123.92:6443 --token jy6fvz.3zf24v9sb5f4dto2 \
    --discovery-token-ca-cert-hash sha256:d0d61ece9c05b8ffcc4b246dfedf139dab7a701618e11621934575844216aee4

Following the terminal prompt, run this on the master node:

    mkdir -p $HOME/.kube && cp -i /etc/kubernetes/admin.conf $HOME/.kube/config && chown $(id -u):$(id -g) $HOME/.kube/config

2.5.6 Join the Worker Nodes to the Master Node

To join a worker node to the master node, run the following on that worker node:

    # Join directly
    kubeadm join 192.168.123.92:6443 --token jy6fvz.3zf24v9sb5f4dto2 \
    --discovery-token-ca-cert-hash sha256:d0d61ece9c05b8ffcc4b246dfedf139dab7a701618e11621934575844216aee4 \
    --cri-socket=unix:///var/run/cri-dockerd.sock

    # Reset, then join
    kubeadm reset -f --cri-socket=unix:///var/run/cri-dockerd.sock && kubeadm join 192.168.123.92:6443 --token jy6fvz.3zf24v9sb5f4dto2 \
    --discovery-token-ca-cert-hash sha256:d0d61ece9c05b8ffcc4b246dfedf139dab7a701618e11621934575844216aee4 \
    --cri-socket=unix:///var/run/cri-dockerd.sock

To generate a new join token and print the matching join command (run on the master node):

    kubeadm token create --print-join-command

To make the kubectl command usable on a worker node, copy the kubeconfig directory to it:

    scp -r $HOME/.kube 192.168.123.93:$HOME/

At this point the work of the kubeadm command is done, and kubectl takes over.
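
The join commands in this section all pass `--cri-socket`, while the command printed by `kubeadm token create --print-join-command` (and the one shown in the init output) does not include it. A small sketch that appends the flag when it is missing follows. `with_cri_socket` is a hypothetical helper, and the join command is hard-coded from this guide's output so the sketch can run anywhere.

```shell
#!/bin/sh
# Sketch: append --cri-socket to a kubeadm join command if it is not there yet.
CRI_SOCKET=unix:///var/run/cri-dockerd.sock

with_cri_socket() {
    # $1 = a "kubeadm join ..." command line
    case "$1" in
        *--cri-socket*) echo "$1" ;;
        *) echo "$1 --cri-socket=$CRI_SOCKET" ;;
    esac
}

# On the master node this would be:
#   join_cmd=$(kubeadm token create --print-join-command)
join_cmd='kubeadm join 192.168.123.92:6443 --token jy6fvz.3zf24v9sb5f4dto2 --discovery-token-ca-cert-hash sha256:d0d61ece9c05b8ffcc4b246dfedf139dab7a701618e11621934575844216aee4'

with_cri_socket "$join_cmd"
```

The printed line can then be run as root on each worker node.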

2.5.7 Install a Network Plugin

These steps only need to be run on the master node.

Install the kube-flannel network plugin

    # Look up the DNS resolution of raw.githubusercontent.com at https://site.ip138.com/ and add it to /etc/hosts
    # 521github.com
    # Download kube-flannel.yml with wget
    wget --no-check-certificate https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
    # Apply it on the master node
    kubectl apply -f kube-flannel.yml

Install the calico network plugin

Official website: https://docs.tigera.io/

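    # Look up the DNS resolution of raw.githubusercontent.com at https://site.ip138.com/ and add it to /etc/hosts
    # Download calico.yaml
    # https://docs.tigera.io/calico/latest/getting-started/kubernetes/self-managed-onprem/onpremises
    wget --no-check-certificate https://raw.githubusercontent.com/projectcalico/calico/v3.27.0/manifests/calico.yaml

    # The official manifest does not set the IP detection method (IP_AUTODETECTION_METHOD),
    # so it defaults to first-found. That can register an unusable IP as the node IP
    # and break the node-to-node mesh.
    # Fix: open the file and search for CLUSTER_TYPE
    vim calico.yaml
    # /CLUSTER_TYPE
    # Add the following below that entry:
    - name: IP_AUTODETECTION_METHOD
      value: "interface=ens192" # ens192 is the interface name shown by `ip a` or `ifconfig`

    # Apply the edited manifest
    kubectl apply -f calico.yaml

    # Verify
    kubectl get po -n kube-system

This completes the k8s cluster setup (v1.28.4 in this walkthrough).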
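
After the network plugin is running, the nodes should move to the `Ready` state. As a sketch, the output of `kubectl get nodes --no-headers` can be filtered for nodes that are not ready yet. `not_ready_nodes` is a hypothetical helper, and the sample lines are illustrative input written for this example, not output captured from a real cluster.

```shell
#!/bin/sh
# Sketch: list nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
    # stdin: text in the format of `kubectl get nodes --no-headers`
    # (columns: NAME STATUS ROLES AGE VERSION)
    awk '$2 != "Ready" { print $1 }'
}

# Illustrative input only; on the master node use:
#   kubectl get nodes --no-headers | not_ready_nodes
sample='k8s-master Ready    control-plane 10m v1.28.4
k8s-node1  Ready    <none>        5m  v1.28.4
k8s-node2  NotReady <none>        5m  v1.28.4'

printf '%s\n' "$sample" | not_ready_nodes
```

An empty result means every listed node reports `Ready`; a status such as `Ready,SchedulingDisabled` is reported as well because the comparison is exact.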