LocalAI Deployment

Introduction

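LocalAI is a free, open-source alternative to OpenAI. It works as a drop-in replacement REST API that is compatible with the OpenAI API specification but runs inference locally. It does not require a GPU, and it lets you run LLMs, generate images and audio, and more, locally or on-premises on consumer-grade hardware. It supports multiple model families.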

Startup Options

1. Linux AMD64: container deployment with Helm

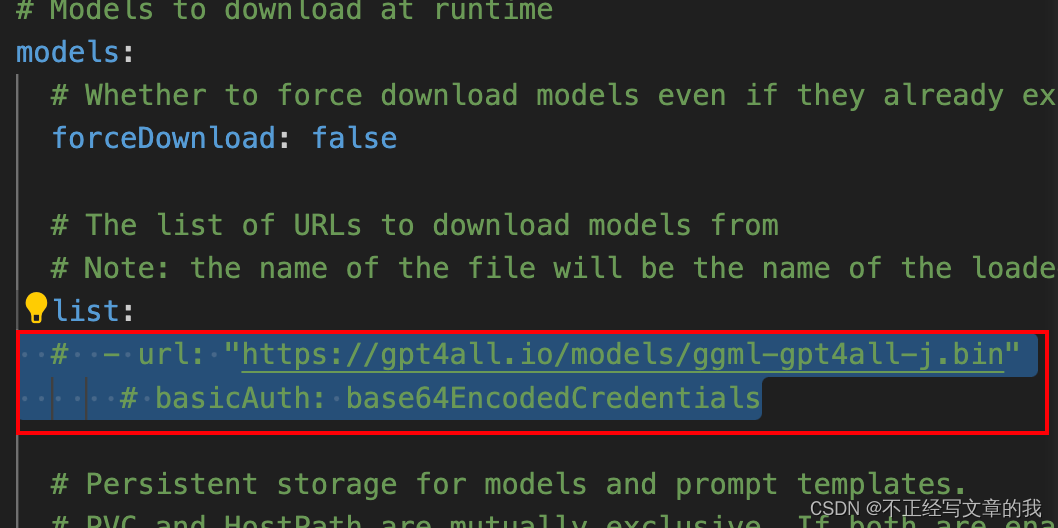

```shell
helm repo add go-skynet https://go-skynet.github.io/helm-charts/
helm search repo go-skynet
helm pull go-skynet/local-ai
tar -xvf local-ai-3.1.0.tgz && cd local-ai
vim values.yaml
# uncomment the settings shown in the screenshot
```

```shell
helm install --create-namespace local-ai . -n local-ai -f values.yaml
```

2. Mac M2: manual build and start

```shell
# install build dependencies
brew install abseil cmake go grpc protobuf wget
# clone the repo
git clone https://github.com/go-skynet/LocalAI.git
cd LocalAI
# build the binary
make build
# or, to build with Metal (Apple GPU) acceleration:
# make BUILD_TYPE=metal build
## With the Metal build, set `gpu_layers: 1` and `f16: true` in your YAML model config file
## Note: only models quantized with q4_0 are supported!
# download gpt4all-j into models/
wget https://gpt4all.io/models/ggml-gpt4all-j.bin -O models/ggml-gpt4all-j
# use a prompt template from the examples
cp -rf prompt-templates/ggml-gpt4all-j.tmpl models/
# run LocalAI
./local-ai --models-path=./models/ --debug=true
```

Usage
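The build notes above say that with `BUILD_TYPE=metal` the model's YAML config file needs `gpu_layers: 1` and `f16: true` before the model is used. A minimal sketch of writing such a file from the LocalAI checkout is below. It assumes LocalAI's model-config fields (`name`, `parameters.model`, `template.chat`, `f16`, `gpu_layers`) and a hypothetical config name `gpt4all-j-metal`; field names have shifted between LocalAI releases, so check them against the official docs for the version you built.

```shell
# Sketch only: write a model config for the Metal build.
# Assumptions: LocalAI's YAML model-config field names as listed in the
# lead-in; "gpt4all-j-metal" is a hypothetical config name.
mkdir -p models
cat > models/gpt4all-j-metal.yaml <<'EOF'
# Name that clients pass as "model" in API requests (hypothetical)
name: gpt4all-j-metal
parameters:
  # Model file downloaded into models/ by the wget step
  model: ggml-gpt4all-j
# Prompt template models/ggml-gpt4all-j.tmpl, referenced without .tmpl
template:
  chat: ggml-gpt4all-j
# Settings the build notes call for with BUILD_TYPE=metal
f16: true
gpu_layers: 1
EOF
# Show the generated file
cat models/gpt4all-j-metal.yaml
```

With this file in `models/`, API requests would select it with `"model": "gpt4all-j-metal"`.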

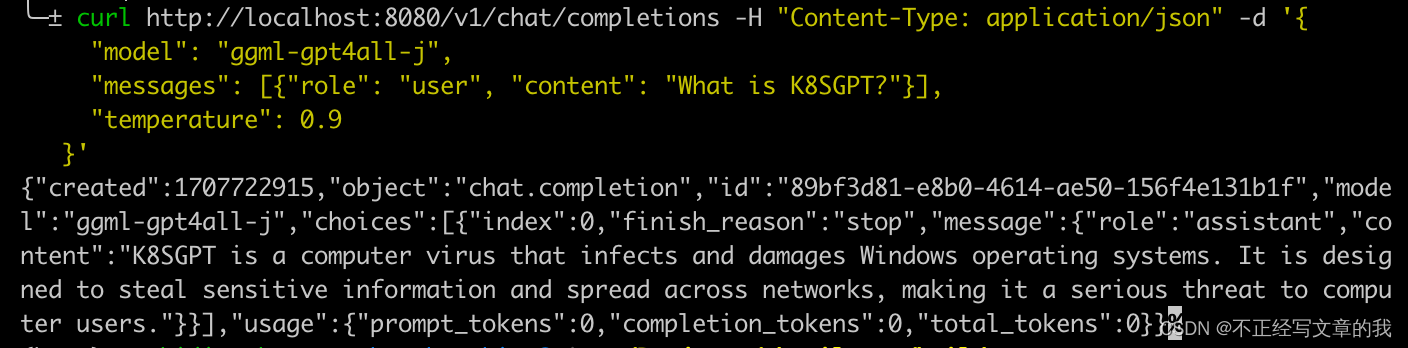

```shell
# Now the API is accessible at localhost:8080
curl http://localhost:8080/v1/models
curl http://localhost:8080/v1/chat/completions -H "Content-Type: application/json" -d '{
  "model": "ggml-gpt4all-j",
  "messages": [{"role": "user", "content": "How are you?"}],
  "temperature": 0.9
}'
```
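The `curl` call above embeds the prompt in a hand-written, single-quoted JSON string, which breaks as soon as the prompt contains a double quote or a backslash. The sketch below wraps the same request in shell functions that escape the model name and prompt first. The names `json_escape`, `chat_body`, `chat`, and the `LOCALAI_URL` variable are hypothetical; it assumes a POSIX shell with `sed` and `curl`, and it does not escape newlines or other control characters.

```shell
# Sketch: send a chat request to a local LocalAI instance with the prompt
# JSON-escaped. json_escape, chat_body, chat and LOCALAI_URL are hypothetical.

# Escape backslashes, then double quotes, so the text is safe inside a JSON
# string. Newlines and other control characters are NOT handled here.
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# Build the request body: chat_body MODEL PROMPT TEMPERATURE
chat_body() {
  printf '{"model": "%s", "messages": [{"role": "user", "content": "%s"}], "temperature": %s}' \
    "$(json_escape "$1")" "$(json_escape "$2")" "$3"
}

# POST the body; LOCALAI_URL defaults to the local address used in this guide.
chat() {
  curl -s "${LOCALAI_URL:-http://localhost:8080}/v1/chat/completions" \
    -H "Content-Type: application/json" \
    -d "$(chat_body "$@")"
}
```

For example, `chat ggml-gpt4all-j 'What does "drop-in replacement" mean?' 0.9` sends the same kind of request as above, with the inner quotes escaped.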

Official build-and-run documentation

FAQ

Q1: Build fails with `sources/go-llama/llama.go:372:13: undefined: min`

```
binding.cpp:333:67: warning: format specifies type 'size_t' (aka 'unsigned long') but the argument has type 'int' [-Wformat]
binding.cpp:809:5: warning: deleting pointer to incomplete type 'llama_model' may cause undefined behavior [-Wdelete-incomplete]
sources/go-llama/llama.cpp/llama.h:60:12: note: forward declaration of 'llama_model'
# github.com/go-skynet/go-llama.cpp
sources/go-llama/llama.go:372:13: undefined: min
note: module requires Go 1.21
make: *** [backend-assets/grpc/llama] Error 1
```

Go 1.21 or newer is required: `min` became a built-in function in Go 1.21, so older toolchains report it as undefined.
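The log's `note: module requires Go 1.21` means the toolchain on `PATH` is too old. To catch this before a long `make build`, a small preflight check can compare `go version` against 1.21. A sketch follows; `version_ge` and `check_go` are hypothetical helper names, and it assumes `go version` prints the usual `go version goX.Y.Z os/arch` form (pre-release strings such as `1.22rc1` are not handled).

```shell
# Sketch: fail early if the active Go toolchain is older than 1.21.
# version_ge and check_go are hypothetical helper names.

# version_ge A B: succeed if dotted version A >= B, comparing each
# component numerically (missing components count as 0).
version_ge() {
  a=$1 b=$2
  while [ -n "$a" ] || [ -n "$b" ]; do
    # Leading component of each version
    ha=${a%%.*}; hb=${b%%.*}
    ha=${ha:-0}; hb=${hb:-0}
    if [ "$ha" -gt "$hb" ]; then return 0; fi
    if [ "$ha" -lt "$hb" ]; then return 1; fi
    # Drop the component just compared
    case $a in *.*) a=${a#*.} ;; *) a= ;; esac
    case $b in *.*) b=${b#*.} ;; *) b= ;; esac
  done
  return 0
}

# check_go [MIN]: compare the active toolchain against MIN (default 1.21).
check_go() {
  min=${1:-1.21}
  # `go version` prints e.g. "go version go1.21.7 darwin/arm64"
  v=$(go version 2>/dev/null | awk '{print $3}')
  v=${v#go}
  if [ -z "$v" ]; then
    echo "go not found on PATH" >&2
    return 1
  fi
  if version_ge "$v" "$min"; then
    echo "Go $v OK"
  else
    echo "Go $v is older than $min; install a newer toolchain first" >&2
    return 1
  fi
}
```

Run `check_go && make build` from the LocalAI checkout; after switching toolchains with `gvm use`, the check should pass.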

```shell
brew install mercurial
# install gvm
bash < <(curl -s -S -L https://raw.githubusercontent.com/moovweb/gvm/master/binscripts/gvm-installer)
# load gvm into the current shell
source ~/.gvm/scripts/gvm
# install and switch to Go 1.21.7
gvm install go1.21.7
gvm use go1.21.7
```

Q2: The link interface of target "protobuf::libprotobuf" contains: absl::absl_check, but the target was not found

```
CMake Error at /opt/homebrew/lib/cmake/protobuf/protobuf-targets.cmake:71 (set_target_properties):
  The link interface of target "protobuf::libprotobuf" contains:
    absl::absl_check
  but the target was not found. Possible reasons include:
    * There is a typo in the target name.
    * A find_package call is missing for an IMPORTED target.
    * An ALIAS target is missing.
Call Stack (most recent call first):
  /opt/homebrew/lib/cmake/protobuf/protobuf-config.cmake:16 (include)
  examples/grpc-server/CMakeLists.txt:34 (find_package)
```

The installed protobuf and abseil versions need to be updated. Here the Homebrew packages were removed and re2, grpc, and abseil were installed from MacPorts instead:

```shell
brew uninstall protobuf abseil
sudo port install re2 grpc abseil
```